OP Succinct

Overview

OP Succinct gives every OP Stack rollup the ability to become a ZK rollup.

It combines a few key technologies:

- Kona, Optimism's Rust implementation of the OP Stack's state transition function

- SP1, Succinct's state-of-the-art Rust zkVM

- Succinct Prover Network, Succinct's low-latency, cost-effective proving API

OP Succinct is the only production-ready proving solution for the OP Stack and is trusted by teams like Mantle and Phala.

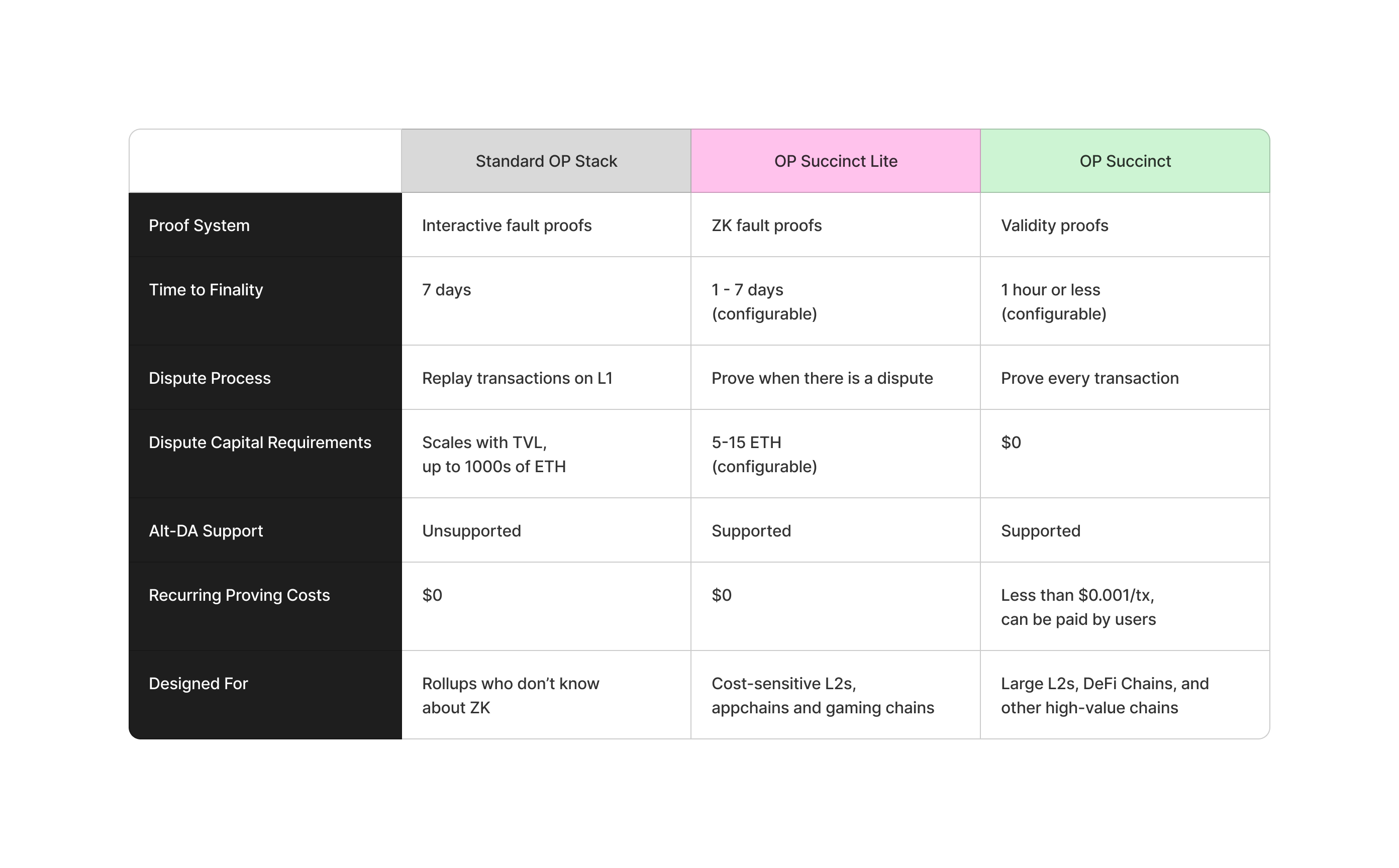

Proving Options

Rollups can choose between two configurations:

- ZK fault proofs (OP Succinct Lite) — only generate a zero-knowledge proof when there is a dispute

- Validity proofs (OP Succinct) — generate a zero-knowledge proof for every transaction, eliminating disputes entirely

Both configurations offer meaningful advantages over the standard OP Stack design.

Support and Community

All of this has been possible thanks to close collaboration with the core team at OP Labs.

Ready to upgrade your rollup with ZK? Contact us to get started with OP Succinct.

Architecture

System Overview

OP Succinct is a lightweight upgrade to the OP Stack that enables ZK-based finality.

This document explains the standard OP Stack components and the lightweight modifications OP Succinct adds to enable proving blocks with zero-knowledge proofs using SP1.

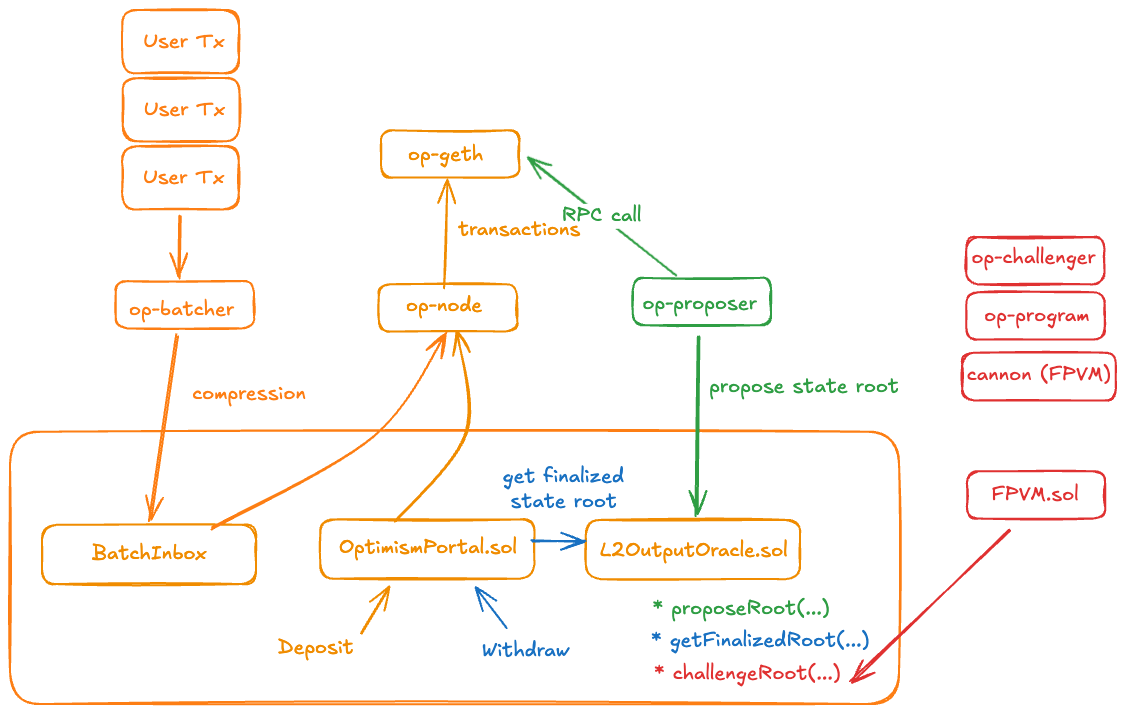

Standard OP Stack Design

In the specification of the standard OP Stack design, there are 4 main components.

- OP Geth: Execution engine for the L2.

- OP Batcher: Collects and batches user transactions efficiently and posts them to L1.

- OP Node: Reads batch data from L1 and passes it to OP Geth to perform state transitions.

- OP Proposer: Posts state roots from OP Node to L1. Enables withdrawal processing.

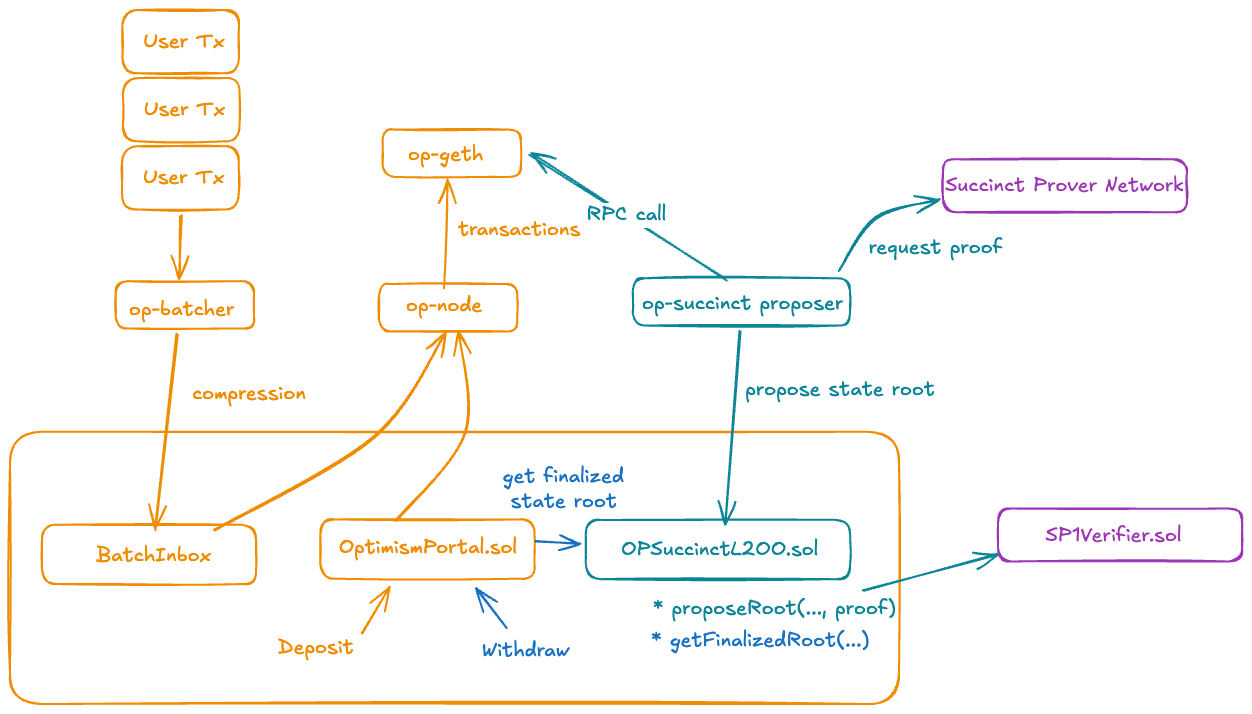

OP Succinct Design

Overview

OP Succinct is a lightweight upgrade to the OP Stack that enables ZK-based finality. Specifically, it upgrades a single on-chain contract and the op-proposer component. No changes are needed to op-geth, op-batcher, or op-node.

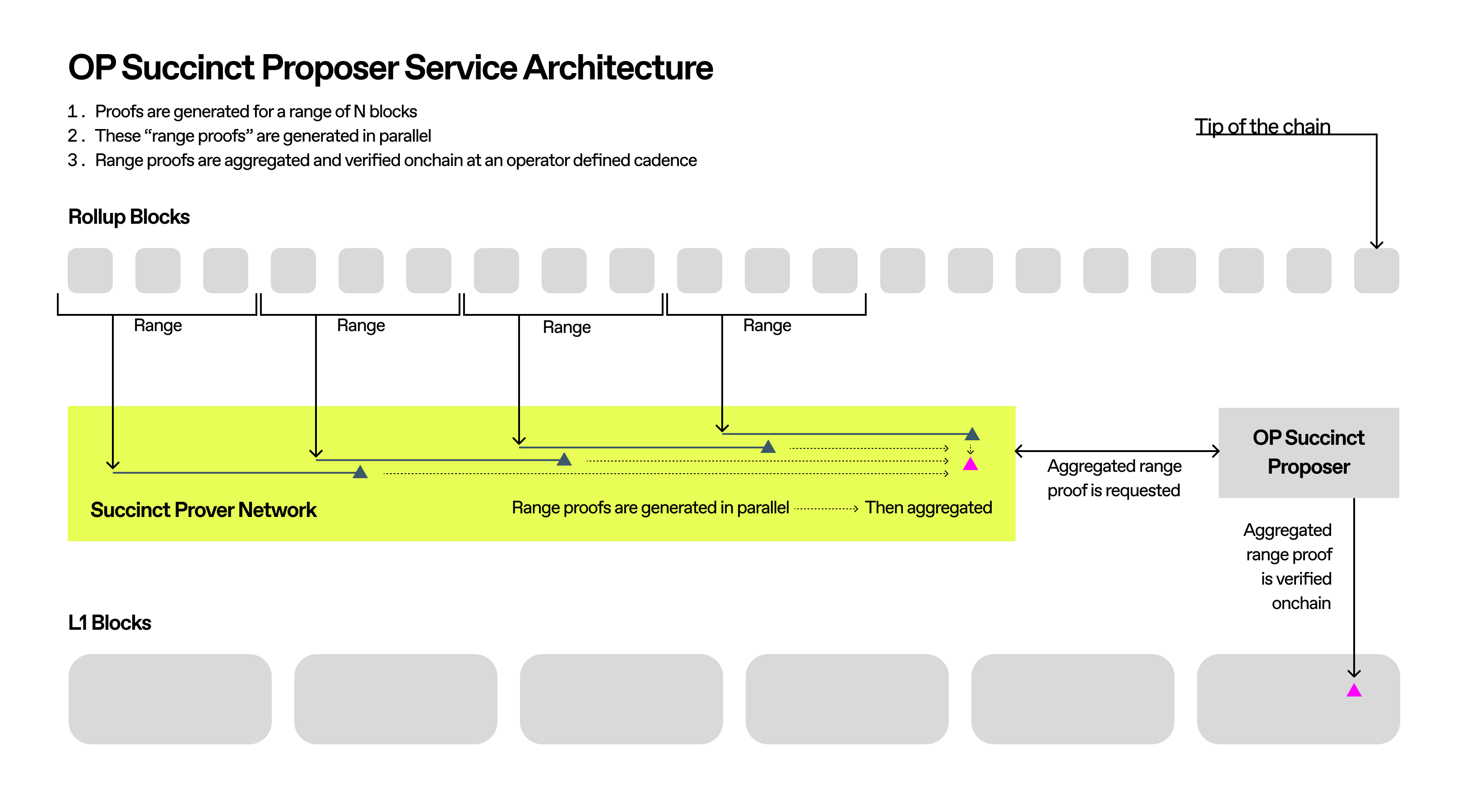

Service Architecture

The OP Succinct Proposer is a new service that orchestrates the proving pipeline. It monitors L1 for posted batches, generates proofs for the corresponding L2 blocks, and submits state roots to L1 together with their ZK proofs.

- User transactions are processed by standard OP Stack components.

- Validity proofs are generated for ranges of blocks with the range program.

- Range proofs are combined into a single aggregation proof that is cheaply verifiable on-chain.

- The OP Succinct proposer submits the aggregation proof to the on-chain contract.

- The on-chain contract verifies the proof and updates the L2 state root for withdrawals.
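
The range-then-aggregate flow above can be pictured with a small sketch. It is illustrative only: the function names, the fixed-size chunking, and the (start, end] convention are assumptions made for this example, not OP Succinct's implementation.

```python
from typing import List, Tuple

# (start_block, end_block): start is exclusive, end is inclusive (assumed convention).
Range = Tuple[int, int]


def split_into_ranges(start: int, end: int, interval: int) -> List[Range]:
    """Cut the L2 blocks (start, end] into consecutive ranges of at most `interval` blocks."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    ranges = []
    cursor = start
    while cursor < end:
        upper = min(cursor + interval, end)
        ranges.append((cursor, upper))
        cursor = upper
    return ranges


def aggregate(ranges: List[Range]) -> Range:
    """Stand-in for the aggregation step: contiguous ranges collapse into one span."""
    if not ranges:
        raise ValueError("nothing to aggregate")
    for (_, prev_end), (next_start, _) in zip(ranges, ranges[1:]):
        if prev_end != next_start:
            raise ValueError("range proofs must be contiguous")
    return (ranges[0][0], ranges[-1][1])
```

Each tuple stands for one range proof, and the single tuple returned by `aggregate` stands for the aggregation proof that is submitted on-chain.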

Repository Structure

The OP Succinct repository structure is as follows:

op-succinct

├── contracts # Smart contracts for OP Succinct

│ ├── validity # Validity contracts

│ ├── fp # Fault proof contracts

├── validity # Validity proposer and challenger implementation

├── fault-proof # Fault proof system implementation

├── programs # Range and aggregation program implementations

├── utils # Shared utilities

│ ├── client # Client-side utilities

│ ├── host # Host-side utilities

│ └── build # Build utilities

├── scripts # Development and deployment scripts

└── elf # Reproducible binaries

OP Succinct (Validity)

Quick Start

This guide will walk you through the steps to deploy OP Succinct for your OP Stack chain. By the end of this guide, you will have a deployed smart contract on L1 that is tracking the state of your OP Stack chain with mock SP1 proofs and Ethereum as the data availability layer.

For OP Stack chains with alternative DA layers, please refer to the Experimental Features section.

Prerequisites

- Compatible RPCs. If you don't have these already, see Node Setup for more information.

  - L1 Archive Node (L1_RPC)

  - L2 Execution Node (L2_RPC)

  - L2 Rollup Node (L2_NODE_RPC)

- Foundry

- Docker

- Rust

- Just

On Ubuntu, you'll need some system dependencies to run the service:

sudo apt update && sudo apt install -y \
  curl clang pkg-config libssl-dev ca-certificates git libclang-dev jq build-essential

Step 1: Clone and build contracts

Clone the repository and build the contracts:

git clone https://github.com/succinctlabs/op-succinct.git

cd op-succinct/contracts

forge build

cd ..

Step 2: Configure environment

In the root directory, create a file called .env and set the following environment variables:

# Required

L1_RPC=<YOUR_L1_RPC_URL> # L1 Archive Node

L2_RPC=<YOUR_L2_RPC_URL> # L2 Execution Node (op-geth)

L2_NODE_RPC=<YOUR_L2_NODE_RPC_URL> # L2 Rollup Node (op-node)

PRIVATE_KEY=<YOUR_PRIVATE_KEY> # Private key for deploying contracts

ETHERSCAN_API_KEY=<YOUR_ETHERSCAN_API_KEY> # For verifying deployed contracts

# Optional

L1_BEACON_RPC=<YOUR_L1_BEACON_RPC_URL> # L1 Beacon Node (for integrations that access consensus-layer data)
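
Before deploying, it can help to confirm that the .env file actually defines every required variable. The following is a minimal sketch of such a check; the helper names are hypothetical, the parser is deliberately simplistic (it treats everything after a # as a comment, so it would mis-read a value containing #), and it is not part of the OP Succinct tooling.

```python
from typing import Dict, List

# Variables listed under "# Required" in the example above.
REQUIRED = ("L1_RPC", "L2_RPC", "L2_NODE_RPC", "PRIVATE_KEY", "ETHERSCAN_API_KEY")


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, ignoring blank lines and trailing # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def missing_required(values: Dict[str, str]) -> List[str]:
    """Return required keys that are absent, empty, or still a <PLACEHOLDER>."""
    return [
        key
        for key in REQUIRED
        if not values.get(key) or values[key].startswith("<")
    ]
```

Calling `missing_required(parse_env(open(".env").read()))` from the project root would list anything still left to fill in.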

Obtaining a Test Private Key

- Anvil (local devnet): Run anvil and use one of the pre-funded accounts printed on startup. Copy the Private Key value for any account. Only use these on your local Anvil network.

- Foundry (generate a fresh key): Run cast wallet new to generate a human-readable output. Save the private key and fund it on your test network.

⚠️ Caution: Never use test keys on mainnet or with real assets.

Step 3: Deploy contracts

Deploy an SP1MockVerifier for verifying mock proofs

Deploy an SP1MockVerifier for verifying mock proofs by running the following command:

% just deploy-mock-verifier

[⠊] Compiling...

[⠑] Compiling 1 files with Solc 0.8.15

[⠘] Solc 0.8.15 finished in 615.84ms

Compiler run successful!

Script ran successfully.

== Return ==

0: address 0x4cb20fa9e6FdFE8FDb6CE0942c5f40d49c898646

....

In these deployment logs, 0x4cb20fa9e6FdFE8FDb6CE0942c5f40d49c898646 is the address of the SP1MockVerifier contract.

Add the address to your .env file:

VERIFIER_ADDRESS=<SP1_MOCK_VERIFIER_ADDRESS> # Address of the SP1MockVerifier contract

Deploy the OPSuccinctL2OutputOracle contract

This contract is a modification of Optimism's L2OutputOracle contract which verifies a proof along with the proposed state root.

Deploy the contract by running the following command. This command automatically fetches and stores the rollup configuration, loads the required environment variables, and executes the Foundry deployment script, optionally verifying the contract on Etherscan:

% just deploy-oracle

...

== Return ==

0: address 0xde4656D4FbeaC0c0863Ab428727e3414Fa251A4C

In these deployment logs, 0xde4656D4FbeaC0c0863Ab428727e3414Fa251A4C is the address of the proxy for the OPSuccinctL2OutputOracle contract. This deployed proxy contract is used to track the verified state roots of the OP Stack chain on L1.
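
If you script the deployment, the returned address can be pulled out of the captured log rather than copied by hand. This is a small sketch that assumes the output keeps the "== Return ==" / "0: address 0x..." shape shown above; the function name is hypothetical.

```python
import re
from typing import Optional

# Matches a line such as "0: address 0xde46...1A4C" in the deployment output.
ADDRESS_LINE = re.compile(r"^\s*0: address (0x[0-9a-fA-F]{40})\s*$", re.MULTILINE)


def extract_returned_address(log: str) -> Optional[str]:
    """Return the first address printed after the '== Return ==' marker, if any."""
    marker = log.find("== Return ==")
    if marker == -1:
        return None
    match = ADDRESS_LINE.search(log, marker)
    return match.group(1) if match else None
```

The result is the value to store as L2OO_ADDRESS in the next step.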

Step 4: Run the service

Add the following parameters to the .env file:

L2OO_ADDRESS=<OPSUCCINCT_L2_OUTPUT_ORACLE_ADDRESS> # Address of the OPSuccinctL2OutputOracle proxy

OP_SUCCINCT_MOCK=true # Set to true to generate mock proofs

When running just the proposer, you won't need the ETHERSCAN_API_KEY or VERIFIER_ADDRESS environment variables. These are only required for contract deployment.

Build the Docker Compose setup

docker compose build

Run the Proposer

The command below launches the op-succinct service. To run it in the background, add the -d flag (docker compose up -d).

After a few minutes, you should see the OP Succinct proposer start to generate mock range proofs. Once enough range proofs have been generated, a mock aggregation proof will be created and submitted to the L1.

docker compose up

To see the logs of the op-succinct service, run:

docker compose logs -f

To stop the op-succinct service, run:

docker compose stop

Contract Management

This section will show you how to configure and deploy the on-chain contracts required for OP Succinct in validity mode.

| Contract | Description |
|---|---|
| OPSuccinctL2OutputOracle.sol | Modified L2OutputOracle.sol with validity proof verification. Compatible with OptimismPortal.sol. |
| OPSuccinctDisputeGame.sol | Validity proof verification contract implementing IDisputeGame.sol interface for OptimismPortal2.sol. Wraps the Oracle contract. |

Environment Variables

This section will show you how to configure the environment variables required to deploy the OPSuccinctL2OutputOracle contract.

Required Parameters

When deploying or upgrading the OPSuccinctL2OutputOracle contract, the following parameters are required to be set in your .env file:

| Parameter | Description |
|---|---|
| L1_RPC | L1 Archive Node. |
| L2_RPC | L2 Execution Node (op-geth). |
| L2_NODE_RPC | L2 Rollup Node (op-node). |
| PRIVATE_KEY | Private key for the account that will be deploying the contract. |
| ETHERSCAN_API_KEY | Etherscan API key used for verifying the contract (optional). |

Optional Advanced Parameters

You can configure additional parameters when deploying or upgrading the OPSuccinctL2OutputOracle contract in your .env file.

| Parameter | Description |
|---|---|
| L1_BEACON_RPC | L1 Consensus (Beacon) Node. Could be required for integrations that access consensus-layer data. |
| VERIFIER_ADDRESS | Default: Succinct's official Plonk VerifierGateway. Address of the ISP1Verifier contract used to verify proofs. For mock proofs, this should be the address of the SP1MockVerifier contract. |
| SUBMISSION_INTERVAL | Default: 10. The minimum interval in L2 blocks for a proof to be submitted. An aggregation proof can be posted for any range larger than this interval. |
| FINALIZATION_PERIOD_SECS | Default: 3600 (1 hour). The time period (in seconds) after which a proposed output becomes finalized and withdrawals can be processed. |
| FALLBACK_TIMEOUT_SECS | Default: 1209600 (2 weeks). The time period (in seconds) after which the system falls back to permissionless mode if no valid proposal has been submitted. Only used in permissioned mode. |
| STARTING_BLOCK_NUMBER | Default: The finalized block number on L2. The block number to initialize the contract from. OP Succinct will start proving state roots from this block number. |
| PROPOSER | Default: The address of the account associated with PRIVATE_KEY. If PRIVATE_KEY is not set, address(0). An Ethereum address authorized to submit proofs. Set to address(0) to allow permissionless submissions. Note: Use the addProposer and removeProposer functions to update the list of approved proposers. |
| CHALLENGER | Default: The address of the account associated with PRIVATE_KEY. If PRIVATE_KEY is not set, address(0). Ethereum address authorized to dispute proofs. Set to address(0) for no challenging. |
| OWNER | Default: The address of the account associated with PRIVATE_KEY. If PRIVATE_KEY is not set, address(0). Ethereum address authorized to update the aggregationVkey, rangeVkeyCommitment, verifier, and rollupConfigHash parameters. Can also transfer ownership of the contract. |
| DEPLOY_PK | Default: The value of PRIVATE_KEY. The private key of the account that will be deploying the contract. |
| ADMIN_PK | Default: The value of PRIVATE_KEY. The private key of the owner (admin) account, used to add and remove configurations and to execute upgrade calls. |
| PROXY_ADMIN | Default: address(0). The address of the L1 ProxyAdmin contract used to manage the OPSuccinctL2OutputOracle proxy. More information can be found here. |
| OP_SUCCINCT_L2_OUTPUT_ORACLE_IMPL | Default: address(0). The address of an already-deployed OPSuccinctL2OutputOracle implementation contract. If this is not set, a new OPSuccinctL2OutputOracle implementation contract will be deployed. |
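
To make the two time-based defaults concrete, here is a small worked sketch. The constants come from the table above; the helper functions and their strict "greater than" comparisons are assumptions for illustration and may not match the contract's exact boundary checks.

```python
# Defaults from the table above, expressed in seconds.
FINALIZATION_PERIOD_SECS = 60 * 60            # 3600, i.e. 1 hour
FALLBACK_TIMEOUT_SECS = 14 * 24 * 60 * 60     # 1209600, i.e. 2 weeks


def is_finalized(now: int, proposed_at: int, period: int = FINALIZATION_PERIOD_SECS) -> bool:
    """Assumed rule: an output is final once more than `period` seconds have passed."""
    return now - proposed_at > period


def fallback_active(now: int, last_proposal_at: int, timeout: int = FALLBACK_TIMEOUT_SECS) -> bool:
    """Assumed rule: permissionless fallback starts once `timeout` seconds pass without a proposal."""
    return now - last_proposal_at > timeout
```

With the defaults, an output proposed at time 0 would be treated as final shortly after t = 3600, and the permissionless fallback would only open after two weeks without any valid proposal.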

Deploying OPSuccinctL2OutputOracle

Similar to the L2OutputOracle contract, the OPSuccinctL2OutputOracle is managed via an upgradeable proxy. Follow the instructions below to deploy the contract.

Prerequisites

- Foundry

- Configured RPCs. If you don't have these already, see Node Setup for more information.

1. Pull the version of OPSuccinctL2OutputOracle you want to deploy

Check out the latest release of op-succinct from here.

2. Configure your environment

First, ensure that you have the correct environment variables set in your .env file. See the Environment Variables section for more information.

3. Deploy OPSuccinctL2OutputOracle

To deploy the OPSuccinctL2OutputOracle contract, run the following command in /contracts.

just deploy-oracle

Optionally, you can pass the environment file you want to use to the command.

just deploy-oracle .env.example

This will deploy the OPSuccinctL2OutputOracle contract using the parameters in the .env.example file.

You will see the following output. The contract address that should be used is the proxy address.

% just deploy-oracle .env.example

Finished `release` profile [optimized] target(s) in 0.40s

Running `target/release/fetch-rollup-config --env-file .env.example`

[⠊] Compiling...

No files changed, compilation skipped

Script ran successfully.

== Return ==

0: address 0xa8A51b0a66FF2ee852a633cC2D59B6C1b47c7f00

...

In the logs above, the proxy address is 0xa8A51b0a66FF2ee852a633cC2D59B6C1b47c7f00.

Updating OPSuccinctL2OutputOracle Parameters

OP Succinct supports a rolling update process when program binaries must be reproduced and only the aggregationVkey, rangeVkeyCommitment, or rollupConfigHash parameters change. For example, this could happen if:

- The SP1 version changes

- An optimization to the range program is released

- Some L2 parameters change

Rolling update guide

- Generate new elfs, vkeys, and a rollup config hash by following this guide.

- From the project's root, run just add-config my_upgrade. This will automatically fetch the aggregationVkey and rangeVkeyCommitment from the /elf directory, and the rollupConfigHash from the L2_RPC set in the .env. The output will look like the following:

$ just add-config my_upgrade

...

== Logs ==

Added OpSuccinct config: my_upgrade

## Setting up 1 EVM.

==========================

Chain 11155111

Estimated gas price: 0.002818893 gwei

Estimated total gas used for script: 147070

Estimated amount required: 0.00000041457459351 ETH

==========================

##### sepolia

✅ [Success] Hash: 0xa87279416385a17518f8cc27a28fa43432b1bf7dba6a1983cdf5146220a4ec7a

Block: 8570449

Paid: 0.00000020644771056 ETH (100561 gas * 0.00205296 gwei)

✅ Sequence #1 on sepolia | Total Paid: 0.00000020644771056 ETH (100561 gas * avg 0.00205296 gwei)

==========================

ONCHAIN EXECUTION COMPLETE & SUCCESSFUL.

...

- Spin up a new proposer with the OP_SUCCINCT_CONFIG_NAME environment variable set to the name of the config you added. For this example, you would set OP_SUCCINCT_CONFIG_NAME="my_upgrade" in your .env file.

- Shut down your old proposer.

- For security, delete your old OpSuccinctConfig by running just remove-config old_config.

Using an EOA admin key

If you are the owner of the OPSuccinctL2OutputOracle contract, you can set ADMIN_PK in your .env to directly add and remove configurations. If unset, this will default to the value of PRIVATE_KEY.

Updating Parameters with a non-EOA ADMIN_PK

If the owner of the OPSuccinctL2OutputOracle is not an EOA (e.g. multisig, contract), set EXECUTE_UPGRADE_CALL to false in your .env file. This will output the raw calldata for the parameter update calls, which can be executed by the owner in a separate context.

| Parameter | Description |
|---|---|
| EXECUTE_UPGRADE_CALL | Set to false to output the raw calldata for the parameter update calls. |

Then, run the following command from the project root.

$ just add-config new_config

...

== Logs ==

The calldata for adding the OP Succinct configuration is:

0x47c37e9c1614abfc873fd38dcc6705b30385...

Warning: No transactions to broadcast.

Upgrading OPSuccinctL2OutputOracle

Similar to the L2OutputOracle contract, the OPSuccinctL2OutputOracle is managed via an upgradeable proxy. The upgrade process is the same as the L2OutputOracle contract.

1. Decide on the target OPSuccinctL2OutputOracle contract code

(Recommended) Using OPSuccinctL2OutputOracle from a release

Check out the latest release of op-succinct from here. You can always find the latest version of the OPSuccinctL2OutputOracle on the latest release.

Manual Changes to OPSuccinctL2OutputOracle

If you want to manually upgrade the OPSuccinctL2OutputOracle contract, follow these steps:

- Make the relevant changes to the OPSuccinctL2OutputOracle contract.

- Then, manually bump the initializerVersion in the OPSuccinctL2OutputOracle contract to keep track of the upgrade history.

// Original initializerVersion

uint256 public constant initializerVersion = 1;

// Increment the initializerVersion to 2.

uint256 public constant initializerVersion = 2;

2. Configure your environment

Ensure that you have the correct environment variables set in your environment file. See the Environment Variables section for more information.

3. Upgrade the OPSuccinctL2OutputOracle contract

Get the address of the existing OPSuccinctL2OutputOracle contract. If you are upgrading with an EOA ADMIN key, you will execute the upgrade call with the ADMIN key. If you do not have the ADMIN key, the call below will output the raw calldata for the upgrade call, which can be executed by the "owner" in a separate context.

Upgrading with an EOA ADMIN key

To update the L2OutputOracle implementation with an EOA ADMIN key, run the following command in /contracts.

just upgrade-oracle

Upgrading with a non-EOA ADMIN key

If the owner of the L2OutputOracle is not an EOA (e.g. multisig, contract), set EXECUTE_UPGRADE_CALL to false in your .env file. This will output the raw calldata for the upgrade call, which can be executed by the owner in a separate context.

| Parameter | Description |
|---|---|
| EXECUTE_UPGRADE_CALL | Set to false to output the raw calldata for the upgrade call. |

Then, run the following command in /contracts.

just upgrade-oracle

% just upgrade-oracle

warning: op-succinct-scripts@0.1.0: fault-proof built with release-client-lto profile

warning: op-succinct-scripts@0.1.0: range built with release-client-lto profile

warning: op-succinct-scripts@0.1.0: native_host_runner built with release profile

Finished `release` profile [optimized] target(s) in 0.35s

Running `target/release/fetch-rollup-config --env-file .env`

[⠊] Compiling...

Script ran successfully.

== Logs ==

The calldata for upgrading the contract with the new initialization parameters is:

0x4f1ef2860000000000000000000000007f5d6a5b55ee82090aedc859b40808103b30e46900000000000000000000000000000000000000000000000000000000000000400000000000000000000000000000000000000000000000000000000000000184c0e8e2a100000000000000000000000000000000000000000000000000000000000004b00000000000000000000000000000000000000000000000000000000000000002000000000000000000000000000000000000000000000000000000000135161100000000000000000000000000000000000000000000000000000000674107ce000000000000000000000000ded0000e32f8f40414d3ab3a830f735a3553e18e000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000001346d7ea10cc78c48e3da6f1890bb16cf27e962202f0f9b2c5c57f3cfb0c559ec1dca031e9fc246ec47246109ebb324a357302110de5d447af13a07f527620000000000000000000000004cb20fa9e6fdfe8fdb6ce0942c5f40d49c8986469ad6f24abc0df5e4cab37c1efae21643938ed5393389ce9e747524a59546a8785e32d5f9f902c6a46cb75cbdb083ea67b9475d7026542a009dc9d99072f4bdf100000000000000000000000000000000000000000000000000000000

## Setting up 1 EVM.
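
When the calldata is handed to a multisig or another contract owner, it is worth inspecting before it is executed. The sketch below splits a calldata string into its 4-byte selector and 32-byte argument words. The sample is rebuilt from the first three words of the output above. The selector 0x4f1ef286 appears to match the standard upgradeToAndCall(address,bytes) proxy function, but treat that reading as an assumption and confirm it against your proxy's ABI. The helper names are hypothetical.

```python
from typing import List, Tuple


def split_calldata(calldata: str) -> Tuple[str, List[str]]:
    """Split hex calldata into (selector, list of 32-byte words as hex strings)."""
    body = calldata[2:] if calldata.startswith("0x") else calldata
    selector, args = body[:8], body[8:]
    words = [args[i:i + 64] for i in range(0, len(args), 64)]
    return selector, words


def word_to_address(word: str) -> str:
    """An ABI-encoded address occupies the low 20 bytes of its 32-byte word."""
    return "0x" + word[-40:]


# Shortened sample: selector plus the first three argument words from the log above.
sample = (
    "0x4f1ef286"
    + "7f5d6a5b55ee82090aedc859b40808103b30e469".rjust(64, "0")  # new implementation
    + "40".rjust(64, "0")    # offset of the bytes argument
    + "184".rjust(64, "0")   # length of the initializer calldata, in bytes
)
selector, words = split_calldata(sample)
new_implementation = word_to_address(words[0])
```

Under that reading, the first word is the new implementation address and the third word is the length of the embedded initializer call.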

OptimismPortal2 Support

Overview

This section demonstrates how OP Succinct can be used with OptimismPortal2 by conforming to Optimism's IDisputeGame.

The OPSuccinctDisputeGame contract is a thin wrapper around OPSuccinctL2OutputOracle that implements IDisputeGame.

Set dispute game to OPSuccinctDisputeGame

OptimismPortal2 requires a DisputeGameFactory contract which manages the lifecycle of dispute games, and the current active dispute game.

To use OP Succinct with OptimismPortal2, you must set the canonical dispute game implementation to OPSuccinctDisputeGame.

No Existing DisputeGameFactory (Testing)

If you don't have a DisputeGameFactory contract or OptimismPortal2 setup and want to test OP Succinct with the DisputeGameFactory contract, follow these steps:

After deploying the OPSuccinctL2OutputOracle contract, set the following environment variables in your .env file:

| Variable | Description | Example |
|---|---|---|
| L2OO_ADDRESS | Address of the OPSuccinctL2OutputOracle contract | 0x123... |
| PROPOSER_ADDRESSES | Comma-separated list of addresses allowed to propose games. | 0x123...,0x456... |

Run the following command to deploy the OPSuccinctDisputeGame contract:

just deploy-dispute-game-factory

If successful, you should see the following output:

[⠊] Compiling...

[⠊] Compiling 1 files with Solc 0.8.15

[⠒] Solc 0.8.15 finished in 1.93s

Compiler run successful!

Script ran successfully.

== Return ==

0: address 0x6B3342821680031732Bc7d4E88A6528478aF9E38

In these deployment logs, 0x6B3342821680031732Bc7d4E88A6528478aF9E38 is the address of the proxy for the DisputeGameFactory contract.

Existing DisputeGameFactory

If you already have a DisputeGameFactory contract, you must call the setImplementation function to set the canonical dispute game implementation to OPSuccinctDisputeGame. You must additionally set the disputeGameFactory variable in the OPSuccinctL2OutputOracle contract to the address of the DisputeGameFactory contract.

// The game type for the OP_SUCCINCT proof system.

GameType gameType = GameType.wrap(uint32(6));

// Set the canonical dispute game implementation.

gameFactory.setImplementation(gameType, IDisputeGame(address(game)));

// Set the dispute game factory in the OPSuccinctL2OutputOracle contract.

opsuccinctL2OutputOracle.setDisputeGameFactory(gameFactory);

Use OP Succinct with OptimismPortal2

Once you have a DisputeGameFactory contract, you can use OP Succinct with OptimismPortal2 by setting the DGF_ADDRESS environment variable with the address of the DisputeGameFactory contract in your .env file.

With this environment variable set, the proposer will create, initialize and finalize a new OPSuccinctDisputeGame contract on the DisputeGameFactory contract with every aggregation proof.

Note: The proposer will create dispute games by calling the dgfProposeL2Output function on the OPSuccinctL2OutputOracle contract, which calls create on the underlying dispute game factory contract. This is a security measure to prevent proposers from creating dispute games directly on the L2OutputOracle contract when using the OptimismPortal2 interface.

Toggle Optimistic Mode

Optimistic mode is a feature that allows the L2OutputOracle to accept outputs without verification (mirroring the permissioned L2OutputOracle contract). This is useful for testing and development purposes, and as a fallback for OPSuccinctL2OutputOracle in the event of an outage.

When optimistic mode is enabled, the OPSuccinctL2OutputOracle's proposeL2Output function will match the interface of the original L2OutputOracle contract, with the modification that the proposer address must be in the approvedProposers mapping, or permissionless proposing must be enabled.

Enable Optimistic Mode

To enable optimistic mode, call the enableOptimisticMode function on the OPSuccinctL2OutputOracle contract.

function enableOptimisticMode(uint256 _finalizationPeriodSeconds) external onlyOwner whenNotOptimistic {

finalizationPeriodSeconds = _finalizationPeriodSeconds;

optimisticMode = true;

emit OptimisticModeToggled(true, _finalizationPeriodSeconds);

}

Ensure that the finalizationPeriodSeconds is set to a value that is appropriate for your use case. The standard setting is 1 week (604800 seconds).

The finalizationPeriodSeconds should never be 0.

Disable Optimistic Mode

By default, optimistic mode is disabled. To switch back to validity mode, call the disableOptimisticMode function on the OPSuccinctL2OutputOracle contract.

function disableOptimisticMode(uint256 _finalizationPeriodSeconds) external onlyOwner whenOptimistic {

finalizationPeriodSeconds = _finalizationPeriodSeconds;

optimisticMode = false;

emit OptimisticModeToggled(false, _finalizationPeriodSeconds);

}

Set the finalizationPeriodSeconds to a value that is appropriate for your use case. An example configuration is 1 hour (3600 seconds).

The finalizationPeriodSeconds should never be 0.
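
The two functions above behave like a guarded toggle. The following toy model, written in Python rather than Solidity, mirrors the whenNotOptimistic and whenOptimistic guards so the allowed call order is easy to see. It is a sketch, not the contract: the class name is hypothetical, and the check that rejects a period of 0 is an added illustration of the advice above, not something the Solidity shown enforces.

```python
class OracleModeModel:
    """Toy model of the two mode-switching functions shown above (not the contract)."""

    def __init__(self, finalization_period_seconds: int) -> None:
        self.finalization_period_seconds = finalization_period_seconds
        self.optimistic_mode = False  # optimistic mode is disabled by default

    def _switch(self, optimistic: bool, finalization_period_seconds: int) -> None:
        # Hypothetical guard: the text says the period should never be 0,
        # but the Solidity shown above does not enforce it.
        if finalization_period_seconds <= 0:
            raise ValueError("finalization period must be greater than 0")
        self.finalization_period_seconds = finalization_period_seconds
        self.optimistic_mode = optimistic

    def enable_optimistic_mode(self, finalization_period_seconds: int) -> None:
        if self.optimistic_mode:  # mirrors whenNotOptimistic
            raise RuntimeError("optimistic mode is already enabled")
        self._switch(True, finalization_period_seconds)

    def disable_optimistic_mode(self, finalization_period_seconds: int) -> None:
        if not self.optimistic_mode:  # mirrors whenOptimistic
            raise RuntimeError("optimistic mode is not enabled")
        self._switch(False, finalization_period_seconds)
```

A typical sequence would be `enable_optimistic_mode(604800)` during an outage, followed by `disable_optimistic_mode(3600)` once proving resumes.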

Validity Proposer

In validity mode, OP Succinct will generate validity proofs for all OP Stack transactions.

The following guide will walk you through the steps of configuring the OP Succinct proposer service in validity mode for your chain. Proceed with this section after you have deployed the OPSuccinctL2OutputOracle contract or the OPSuccinctDisputeGame contract.

Proposer

The op-succinct service monitors the state of the L2 chain, requests proofs from the Succinct Prover Network and submits them to the L1.

RPC Requirements

Confirm that your RPCs have all of the required endpoints as specified in the prerequisites section.

Hardware Requirements

We recommend the following hardware configuration for the default op-succinct validity service (1 concurrent proof request & 1 concurrent witness generation thread):

Using the docker compose file:

- Full op-succinct service: 2 vCPUs, 4GB RAM.

- Mock op-succinct service: 2 vCPUs, 8GB RAM. Increased memory because the machine is executing the proofs locally.

Depending on the number of concurrent requests you want to run, you may need to increase the number of vCPUs and memory allocated to the op-succinct container.

Environment Setup

Make sure to include all of the required environment variables in the .env file.

Before starting the proposer, ensure you have deployed the relevant contracts and have the address of the proxy contract ready. Follow the steps in the Environment Variables section.

Required Environment Variables

| Parameter | Description |
|---|---|
| L1_RPC | L1 Archive Node. |
| L2_RPC | L2 Execution Node (op-geth). |
| L2_NODE_RPC | L2 Rollup Node (op-node). |
| NETWORK_PRIVATE_KEY | Private key for the Succinct Prover Network. See the Succinct Prover Network Quickstart for setup instructions. |
| L2OO_ADDRESS | Address of the OPSuccinctL2OutputOracle contract. |
| PRIVATE_KEY | Private key for the account that will be posting output roots to L1. |

Optional Environment Variables

| Parameter | Description |
|---|---|
| L1_BEACON_RPC | L1 Consensus (Beacon) Node. Could be required for integrations that access consensus-layer data. |
| NETWORK_RPC_URL | Default: https://rpc.production.succinct.xyz. RPC URL for the Succinct Prover Network. |
| DATABASE_URL | Default: postgres://op-succinct@postgres:5432/op-succinct. The address of a Postgres database for storing the intermediate proposer state. |
| L1_CONFIG_DIR | Default: <project-root>/configs/L1. The directory containing the L1 chain configuration files. |
| L2_CONFIG_DIR | Default: <project-root>/configs/L2. The directory containing the L2 chain configuration files. |
| DGF_ADDRESS | Address of the DisputeGameFactory contract. Note: If set, the proposer will create a dispute game with the DisputeGameFactory, rather than the OPSuccinctL2OutputOracle. Compatible with OptimismPortal2. |
| RANGE_PROOF_STRATEGY | Default: reserved. Set to hosted to use the hosted proof strategy. |
| AGG_PROOF_STRATEGY | Default: reserved. Set to hosted to use the hosted proof strategy. |
| AGG_PROOF_MODE | Default: plonk. Set to groth16 to use the Groth16 proof type. Note: Changing the proof mode requires updating the verifier gateway contract address in your L2OutputOracle contract deployment. See SP1 Contract Addresses for verifier addresses. |
| SUBMISSION_INTERVAL | Default: 1800. The number of L2 blocks that must be proven before a proof is submitted to the L1. Note: The interval used by the validity service is always greater than or equal to the submissionInterval configured on the L2OO contract. To let the validity service control this parameter entirely, set the submissionInterval in the contract to 1. |
| RANGE_PROOF_INTERVAL | Default: 1800. The number of blocks to include in each range proof. For chains with high throughput, decrease this value. |
| RANGE_PROOF_EVM_GAS_LIMIT | Default: 0. The total amount of Ethereum gas allowed in each range proof. If 0, RANGE_PROOF_INTERVAL is used instead, giving a fixed number of blocks per range proof. Note: If both RANGE_PROOF_INTERVAL and RANGE_PROOF_EVM_GAS_LIMIT are set, a range proof is closed when the cumulative gas reaches RANGE_PROOF_EVM_GAS_LIMIT or the number of blocks reaches RANGE_PROOF_INTERVAL, whichever occurs first. |
| MAX_CONCURRENT_PROOF_REQUESTS | Default: 1. The maximum number of concurrent proof requests (in mock and real mode). |
| MAX_CONCURRENT_WITNESS_GEN | Default: 1. The maximum number of concurrent witness generation requests. |
| OP_SUCCINCT_MOCK | Default: false. Set to true to run in mock proof mode. The OPSuccinctL2OutputOracle contract must be configured to use an SP1MockVerifier. |
| METRICS_PORT | Default: 8080. The port to run the metrics server on. |
| LOOP_INTERVAL | Default: 60. The interval (in seconds) between each iteration of the OP Succinct service. |
| SIGNER_URL | URL for the Web3Signer. Note: This takes precedence over the PRIVATE_KEY environment variable. |
| SIGNER_ADDRESS | Address of the account that will be posting output roots to L1. Note: Only set this if the signer is a Web3Signer. Required if SIGNER_URL is set. |
| SAFE_DB_FALLBACK | Default: false. Whether to fall back to timestamp-based L1 head estimation when SafeDB is not activated for op-node. When false, the proposer will panic if SafeDB is not available. The default is false because the fallback mechanism results in a higher proving cost. |
| OP_SUCCINCT_CONFIG_NAME | Default: "opsuccinct_genesis". The name of the configuration the proposer will interact with on chain. |
| OTLP_ENABLED | Default: false. Whether to export logs to OTLP. |
| LOGGER_NAME | Default: op-succinct. This will be the service.name exported in the OTLP logs. |
| OTLP_ENDPOINT | Default: http://localhost:4317. The endpoint to forward OTLP logs to. |
| USE_KMS_REQUESTER | Default: empty. Whether to expect NETWORK_PRIVATE_KEY to be an AWS KMS key ARN instead of a plaintext private key. |
| MAX_PRICE_PER_PGU | Default: 300,000,000. The maximum price per PGU for proving. |
| PROVING_TIMEOUT | Default: 14400 (4 hours). The timeout to use for proving (in seconds). |
| NETWORK_CALLS_TIMEOUT | Default: 15 (15 seconds). The timeout for network prover calls (in seconds). |
| RANGE_CYCLE_LIMIT | Default: 1,000,000,000,000. The cycle limit to use for range proofs. |
| RANGE_GAS_LIMIT | Default: 1,000,000,000,000. The gas limit to use for range proofs. |
| AGG_CYCLE_LIMIT | Default: 1,000,000,000,000. The cycle limit to use for aggregation proofs. |
| AGG_GAS_LIMIT | Default: 1,000,000,000,000. The gas limit to use for aggregation proofs. |
| WHITELIST | Default: empty. The list of prover addresses that are allowed to bid on proof requests. |
| MIN_AUCTION_PERIOD | Default: 1. The minimum auction period (in seconds). |
| AUCTION_TIMEOUT | Default: 60 (1 minute). How long to wait before canceling a proof request that hasn't been assigned (in seconds). |
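
Two of the interval rules in the table are easier to follow with numbers. The sketch below models (1) the effective submission interval as the larger of the service setting and the contract setting, and (2) how a range is closed by block count or cumulative gas, whichever comes first. The function names are hypothetical, and details such as whether the block that crosses the gas limit is included in the range, or how a trailing partial range is handled, are assumptions rather than the proposer's documented behavior.

```python
from typing import List, Sequence


def effective_submission_interval(env_interval: int, contract_interval: int) -> int:
    """The service never uses an interval smaller than the contract's submissionInterval."""
    return max(env_interval, contract_interval)


def plan_range_lengths(
    gas_per_block: Sequence[int],
    range_proof_interval: int,
    evm_gas_limit: int = 0,
) -> List[int]:
    """Return the number of blocks placed in each range proof.

    A range closes when it holds `range_proof_interval` blocks or, if
    `evm_gas_limit` is non-zero, when its cumulative gas reaches that limit.
    """
    lengths = []
    count = 0
    gas = 0
    for used in gas_per_block:
        count += 1
        gas += used
        hit_gas = evm_gas_limit > 0 and gas >= evm_gas_limit
        if count == range_proof_interval or hit_gas:
            lengths.append(count)
            count, gas = 0, 0
    if count:
        lengths.append(count)  # trailing partial range (a simplification)
    return lengths
```

For example, five blocks of 10 gas each with an interval of 4 and a gas limit of 25 split into ranges of 3 and 2 blocks, because the gas limit is reached before the block count.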

Build the Proposer Service

Build the OP Succinct validity service.

docker compose build

Run the Proposer

Run the OP Succinct validity service.

docker compose up

To see the logs of the OP Succinct services, run:

docker compose logs -f

After several minutes, the validity service will start to generate range proofs. Once enough range proofs have been generated, an aggregation proof will be created. Once the aggregation proof is complete, it will be submitted to the L1.

To stop the OP Succinct validity service, run:

docker compose stop

Proposer Lifecycle

The proposer service monitors the state of the L2 chain, requests proofs, and submits them to the L1. Proof requests are sent to the Succinct Prover Network. Here is how the proposer service decides when to request range and aggregation proofs.

Range Proof Lifecycle

stateDiagram-v2

[*] --> Unrequested: New Request for Range of Blocks

Unrequested --> WitnessGeneration: make_proof_request

WitnessGeneration --> Execution: mock=true

WitnessGeneration --> Prove: mock=false

Execution --> Complete: generate_mock_range_proof

Execution --> Failed: error

Prove --> Complete: proof fulfilled

Prove --> Failed: proof unfulfillable/error

Failed --> Split: range AND (2+ failures OR unexecutable)

Split --> Unrequested: two new smaller ranges

Failed --> Unrequested: retry same range
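The failure branch of the diagram above can be sketched as a small pure function. This is an illustration only, not the proposer's actual code: the BlockRange type, the requeue_after_failure name, and the midpoint split are assumptions; the diagram only says that a range is split into two smaller ranges after 2+ failures or when it is unexecutable.

```rust
/// Half-open range of L2 blocks [start, end) covered by one range proof.
/// Hypothetical type used only for this sketch.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct BlockRange {
    pub start: u64,
    pub end: u64,
}

/// Decide what to put back into the Unrequested state after a failure.
///
/// Follows the diagram: split when the request has failed at least twice or
/// could not be executed; otherwise retry the same range. Splitting at the
/// midpoint is an assumption of this sketch.
pub fn requeue_after_failure(
    range: BlockRange,
    failures: u32,
    unexecutable: bool,
) -> Vec<BlockRange> {
    let len = range.end.saturating_sub(range.start);
    // A single-block range cannot be made smaller, so it is always retried.
    let should_split = (failures >= 2 || unexecutable) && len > 1;
    if !should_split {
        return vec![range];
    }
    let mid = range.start + len / 2;
    vec![
        BlockRange { start: range.start, end: mid },
        BlockRange { start: mid, end: range.end },
    ]
}
```

For example, a range of blocks 100 to 200 that has failed twice would come back as the two ranges 100 to 150 and 150 to 200.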

Aggregation Proof Lifecycle

stateDiagram-v2

[*] --> Unrequested: Total interval of completed range proofs > submissionInterval.

Unrequested --> WitnessGeneration: make_proof_request

WitnessGeneration --> Execution: mock=true

WitnessGeneration --> Prove: mock=false

Execution --> Complete: generate_mock_agg_proof

Execution --> Failed: error

Prove --> Complete: proof fulfilled

Prove --> Failed: proof unfulfillable/error

Failed --> Unrequested: retry same range

Complete --> Relayed: submit_agg_proofs
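The entry condition of this diagram (the total interval of completed range proofs exceeding submissionInterval) might be checked roughly as follows. This is a sketch under assumptions: the function name, the tuple representation of ranges, and the use of the latest proposed block as the starting point are illustrative, and the strict comparison simply follows the diagram label.

```rust
/// Returns the end block of the contiguous run of completed range proofs
/// that starts at `latest_proposed`, if that run is long enough to aggregate.
///
/// `completed` holds half-open (start, end) block ranges in any order.
/// Hypothetical helper; names and representation are assumptions.
pub fn aggregation_target(
    latest_proposed: u64,
    completed: &[(u64, u64)],
    submission_interval: u64,
) -> Option<u64> {
    let mut ranges = completed.to_vec();
    ranges.sort_unstable();

    // Walk forward while each range begins exactly where the previous ended.
    let mut end = latest_proposed;
    for (start, stop) in ranges {
        if start == end && stop > start {
            end = stop;
        } else if start > end {
            break; // gap: later ranges cannot be aggregated yet
        }
    }

    // Strict comparison, as written on the diagram's entry transition.
    if end - latest_proposed > submission_interval {
        Some(end)
    } else {
        None
    }
}
```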

Proposer Operations

The proposer performs the following operations each loop:

- Validates that the requester config matches the contract configuration

- Logs proposer metrics like number of requests in each state

- Handles ongoing tasks by checking completed/failed tasks and cleaning them up

- Sets orphaned tasks (in WitnessGeneration/Execution but not in tasks map) to Failed status

- Gets proof statuses for all requests in proving state from the Prover Network

- Adds new range requests to cover gaps between the latest proposed and finalized blocks. Failed requests are also retried in this step.

- Creates aggregation proofs from completed contiguous range proofs.

- Requests any unrequested proofs from the Prover Network, or generates mock proofs in mock mode.

- Submits any completed aggregation proofs to the L2 output oracle contract.
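As an illustration of the gap-filling step in the list above, the following sketch computes which block ranges still need a request between the latest proposed block and the finalized block. The function name, the range_interval parameter, and the tuple representation are assumptions made for the example; the real proposer tracks requests in its own storage.

```rust
/// Plan new range requests covering every block in
/// [latest_proposed, finalized) that no existing request covers.
///
/// `existing` holds half-open (start, end) ranges already requested.
/// `range_interval` (hypothetical name) caps the size of each new request.
pub fn plan_range_requests(
    latest_proposed: u64,
    finalized: u64,
    existing: &[(u64, u64)],
    range_interval: u64,
) -> Vec<(u64, u64)> {
    assert!(range_interval > 0, "range_interval must be positive");

    let mut covered = existing.to_vec();
    covered.sort_unstable();

    let mut planned = Vec::new();
    let mut cursor = latest_proposed;

    // A zero-length sentinel at `finalized` closes the final gap.
    for (start, end) in covered
        .into_iter()
        .chain(std::iter::once((finalized, finalized)))
    {
        let gap_end = start.min(finalized);
        while cursor < gap_end {
            let next = (cursor + range_interval).min(gap_end);
            planned.push((cursor, next));
            cursor = next;
        }
        // Skip past the blocks the existing request already covers.
        cursor = cursor.max(end);
    }
    planned
}
```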

Upgrading OP Succinct

Each new release of op-succinct will specify if it includes:

- New verification keys

- Contract changes

- New op-succinct binary version

Based on what's included:

- Contract changes → Upgrade the relevant contracts

- New verification keys → Update the aggregationVkey, rangeVkeyCommitment and rollupConfigHash parameters

- New binary → Upgrade Docker images

Upgrade Contract

- Check out the latest release of op-succinct from here.

- Follow the instructions here to upgrade the relevant contracts.

Update Contract Parameters

If you just need to update the aggregationVkey, rangeVkeyCommitment or rollupConfigHash parameters and not upgrade the contract itself, follow these steps:

- Check out the latest release of op-succinct from here.

- Follow the instructions here to update the parameters of the relevant contracts.

Upgrade Docker Images

If you're using Docker, you can use the images associated with the latest release of OP Succinct from GitHub Container Registry (GHCR) here: https://github.com/succinctlabs/op-succinct/pkgs/container/op-succinct%2Fop-succinct.

For example, to use version v2.0.0, you can use ghcr.io/succinctlabs/op-succinct:v2.0.0.

Experimental Features

This section covers experimental features for OP Succinct (Validity).

Celestia DA

The op-succinct-celestia service monitors the state of an OP Stack chain with Celestia DA enabled, requests proofs from the Succinct Prover Network and submits them to the L1.

For detailed setup instructions, see the Celestia DA section.

EigenDA DA

The op-succinct-eigenda service monitors the state of an OP Stack chain with EigenDA enabled, uses an EigenDA Proxy to retrieve and validate blobs from DA certificates, requests proofs from the Succinct Prover Network and submits them to L1.

For detailed setup instructions, see the EigenDA DA section.

Celestia Data Availability

This section describes the requirements to use OP Succinct for a chain with Celestia DA. The requirements are additive to the ones required for the op-succinct service. Please refer to the Proposer section for the base configuration.

Environment Setup

To use Celestia DA, you need additional environment variables.

Important: When using Docker Compose, these variables must be in a .env file in the same directory as your docker-compose-celestia.yml file. Docker Compose needs these variables in .env for variable substitution in the compose file itself.

| Parameter | Description |
|---|---|
| CELESTIA_CONNECTION | URL of the Celestia light node RPC endpoint. For setup instructions, see Celestia's documentation on light node. |
| NAMESPACE | Namespace ID for the Celestia DA. A namespace is a unique identifier that allows applications to have their own data availability space within Celestia's data availability layer. For more details, see Celestia's documentation on Namespaced Merkle Trees (NMTs). |
| CELESTIA_INDEXER_RPC | URL of the op-celestia-indexer RPC endpoint. This is required for querying the location of L2 blocks in Celestia or Ethereum DA. |
| START_L1_BLOCK | Starting L1 block number for the indexer to begin syncing from. Note: This should be early enough to cover derivation of the starting L2 block from the contract. |
| BATCH_INBOX_ADDRESS | Address of the batch inbox contract on L1. |

Additionally, include all the base configuration variables from the Proposer section in the same .env file.

op-celestia-indexer

The op-celestia-indexer is a required component that indexes L2 block locations on both Celestia DA and Ethereum DA. It is automatically included in the docker-compose-celestia.yml configuration and will start before the OP Succinct proposer service.

The indexer runs on port 57220 and uses the environment variables from your .env file. When using docker-compose, the CELESTIA_INDEXER_RPC is automatically set to http://op-celestia-indexer:57220 for inter-container communication.

For more details about op-celestia-indexer, see the official documentation.

Running the indexer separately (optional)

If you need to run the indexer separately without docker-compose:

docker pull opcelestia/op-celestia-indexer:op-node-v1.13.6-rc.1

# Create a directory for the indexer database

mkdir -p ~/indexer-data

docker run -d \

--name op-celestia-indexer \

-p 57220:57220 \

-v ~/indexer-data:/data \

opcelestia/op-celestia-indexer:op-node-v1.13.6-rc.1 \

op-celestia-indexer \

--start-l1-block <START_L1_BLOCK> \

--batch-inbox-address <BATCH_INBOX_ADDRESS> \

--l1-eth-rpc <L1_RPC> \

--l2-eth-rpc <L2_RPC> \

--op-node-rpc <L2_NODE_RPC> \

--rpc.enable-admin \

--rpc.addr 0.0.0.0 \

--rpc.port 57220 \

--da.rpc <CELESTIA_CONNECTION> \

--da.namespace <NAMESPACE> \

--da.auth_token <CELESTIA_AUTH_TOKEN> \

--db-path /data/indexer.db

When running separately, set CELESTIA_INDEXER_RPC=http://localhost:57220 in your .env file.

Run the Celestia Proposer Service

Run the op-succinct-celestia service.

docker compose -f docker-compose-celestia.yml up -d

To see the logs of the op-succinct-celestia service, run:

docker compose -f docker-compose-celestia.yml logs -f

To stop the op-succinct-celestia service, run:

docker compose -f docker-compose-celestia.yml down

Celestia Contract Configuration

Before deploying or updating contracts, generate the CelestiaDA-specific range verification key, aggregation verification key, and rollup config hash with the correct feature flag. This ensures the range verification key commitment matches the Celestia range ELF:

# From the repository root

cargo run --bin config --release --features celestia -- --env-file .env

The command prints the Range Verification Key Hash, Aggregation Verification Key Hash, and Rollup Config Hash; keep these values and ensure they match what you publish on-chain in OPSuccinctL2OutputOracle.

When you use the just helpers below, pass the celestia feature so fetch-l2oo-config runs with the correct ELFs. If you call the binaries manually (fetch-l2oo-config, config, etc.), append --features celestia; otherwise the script emits the default Ethereum DA values and your contracts will revert with ProofInvalid() when submitting proofs.

Deploying OPSuccinctL2OutputOracle with Celestia features

just deploy-oracle .env celestia

Updating OPSuccinctL2OutputOracle Parameters

just update-parameters .env celestia

For more details on the just update-parameters command, see the Updating OPSuccinctL2OutputOracle Parameters section.

EigenDA Data Availability

This section describes the requirements to use OP Succinct for a chain with EigenDA as the data availability layer. The requirements are additive to the ones required for the op-succinct service. Please refer to the Proposer section for the base configuration.

Environment Setup

Create a .env file with all base configuration variables from the Proposer section, plus the EigenDA-specific variable below.

Required Variables

| Parameter | Description |
|---|---|
| EIGENDA_PROXY_ADDRESS | Base URL of the EigenDA Proxy REST service (e.g., http://localhost:3100). OP Succinct connects to this proxy to retrieve and validate EigenDA blobs from DA certificates. |

EigenDA Proxy

The EigenDA Proxy is a REST server that wraps EigenDA client functionality and conforms to the OP Alt-DA server spec. It provides:

- POST routes: Disperse payloads into EigenDA and return a DA certificate.

- GET routes: Retrieve payloads via a DA certificate; performs KZG and certificate verification.

See EigenDA Proxy for more details on how to run the proxy.

After running the proxy, set EIGENDA_PROXY_ADDRESS=http://127.0.0.1:3100 in your .env for OP Succinct to consume the proxy.

EigenDA Contract Configuration

Before deploying or updating contracts, generate the EigenDA-specific verification key commitments and rollup config hash with the correct feature flag. This ensures the range verification key commitment matches the EigenDA range ELF:

# From the repository root

cargo run --bin config --release --features eigenda -- --env-file .env

The command prints the Range Verification Key Hash, Aggregation Verification Key Hash, and Rollup Config Hash; keep these values and ensure they match what you publish on-chain in OPSuccinctL2OutputOracle.

Whenever you rely on just helpers (deploy-oracle, update-parameters, etc.), include the eigenda argument so fetch-l2oo-config runs with the EigenDA feature enabled. If you invoke the Rust binaries directly, add --features eigenda; otherwise the script emits the default Ethereum DA values and your contracts will revert with ProofInvalid() when submitting proofs.

Run the EigenDA Proposer Service

Run the op-succinct-eigenda service.

docker compose -f docker-compose-eigenda.yml up -d

To see the logs of the op-succinct-eigenda service, run:

docker compose -f docker-compose-eigenda.yml logs -f

To stop the op-succinct-eigenda service, run:

docker compose -f docker-compose-eigenda.yml down

Deploying OPSuccinctL2OutputOracle with EigenDA features

just deploy-oracle .env eigenda

Updating OPSuccinctL2OutputOracle Parameters

just update-parameters .env eigenda

For more details on updating parameters, see the Updating OPSuccinctL2OutputOracle Parameters section.

OP Succinct Lite (Fault Proofs)

Architecture

Overview

OP Succinct Lite offers a powerful, configurable fault proof system that enables a simplified on-chain dispute mechanism, reduced time to finality, configurable fast finality and support for Alt-DA.

- The same OP Succinct program is used for both validity and fault proof modes.

- OPSuccinctFaultDisputeGame contract integrated with OP Stack's DisputeGameFactory.

- Single-round dispute resolution with ZK proofs.

We assume that the reader has a solid understanding of the OP Stack's DisputeGameFactory and IDisputeGame interface. Documentation can be found here. We implement the IDisputeGame interface with a ZK-enabled fault proof (using the OP Succinct ZK program) instead of the standard interactive bisection game that the vanilla OP Stack uses.

Core Concepts

- Proposals: Each proposal represents a claimed state transition from a start L2 block to an end L2 block with a startL2OutputRoot and a claimedL2OutputRoot, where the output root is a commitment to the entirety of L2 state.

- Challenges: Participants can challenge proposals they believe are invalid.

- Proofs: ZK proofs that verify the correctness of state transitions contained in proposals, anchored against an L1 block hash.

- Resolution: Process of determining whether a proposal is valid or not.

Dispute Game Implementation

Proposing new state roots goes through the regular flow of the DisputeGameFactory to the OPSuccinctFaultDisputeGame contract that implements the IDisputeGame interface. Each proposal contains a link to a previous parent proposal (unless it is the first proposal after initialization, in which case it stores the parent index as uint32.max), and includes an l2BlockNumber and a claimed l2OutputRoot.

Once a proposal is published and an OPSuccinctFaultDisputeGame is created, the dispute game can be in one of several states:

- Unchallenged: The initial state of a new proposal.

- Challenged: A proposal that has been challenged but not yet proven.

- UnchallengedAndValidProofProvided: A proposal that has been proven valid with a verified proof.

- ChallengedAndValidProofProvided: A challenged proposal that has been proven valid with a verified proof.

- Resolved: The final state after resolution, either GameStatus.CHALLENGER_WINS or GameStatus.DEFENDER_WINS.

Note that challenging a proposal does not require a proof, because challenges should be submittable quickly, without waiting for proof generation. Once a challenge is submitted, the proposal's deadline is reset to allow MAX_PROVE_DURATION for generating a proof that the original proposal is correct. If no valid proof is submitted by the deadline, the proposal is assumed to be invalid and the challenger wins. If a valid proof is submitted by the deadline, the original proposer wins the dispute. If a parent game resolves in favor of the challenger, every child game is also considered invalid.

Illustrative Example

graph TB

A[Genesis State

Block: 0

Root: 0x123] --> |Parent| B[Proposal 1

Block: 1000

Root: 0xabc

Game Status: DEFENDER_WINS]

B --> |Parent| C[Proposal 2A

Block: 2000

Root: 0xdef

Game Status: CHALLENGER_WINS]

B --> |Parent| D[Proposal 2B

Block: 2000

Root: 0xff1

Game Status: Unchallenged]

C --> |Parent| E[Proposal 3A

Block: 3000

Root: 0xbee

Game Status: Unchallenged]

D --> |Parent| F[Proposal 3B

Block: 3000

Root: 0xfab

Game Status: ChallengedAndValidProofProvided]

classDef genesis fill:#d4edda,stroke:#155724

classDef defender_wins fill:#28a745,stroke:#1e7e34,color:#fff

classDef challenger_wins fill:#dc3545,stroke:#bd2130,color:#fff

classDef unchallenged fill:#e2e3e5,stroke:#383d41

classDef proven fill:#17a2b8,stroke:#138496,color:#fff

class A genesis

class B defender_wins

class C challenger_wins

class D,E unchallenged

class F proven

In this example, Proposal 3A would always resolve to CHALLENGER_WINS, as its parent 2A has CHALLENGER_WINS. Proposal 3B would resolve to DEFENDER_WINS if and only if its parent 2B resolves to DEFENDER_WINS, for example by remaining unchallenged past its deadline.

Contract Description

Immutable Variables

Immutable variables are configuration values (typically read from environment

variables e.g. a .env file) set once at contract deployment and cannot be

changed afterward. They are embedded in the contract code, ensuring these

parameters remain fixed for the lifetime of the dispute game.

| Variable | Description |
|---|---|
| MAX_CHALLENGE_DURATION | Time window during which a proposal can be challenged. |
| MAX_PROVE_DURATION | Time allowed for proving a challenge. |
| GAME_TYPE | The type of the game, which is set in the DisputeGameFactory contract. |
| DISPUTE_GAME_FACTORY | The factory contract that creates this game. |
| SP1_VERIFIER | The verifier contract that verifies the SP1 proof. |
| ROLLUP_CONFIG_HASH | Hash of the chain's rollup configuration. |
| AGGREGATION_VKEY | The verification key for the aggregation SP1 program. |
| RANGE_VKEY_COMMITMENT | The commitment to the BabyBear representation of the verification key of the range SP1 program. |
| CHALLENGER_BOND | Amount of ETH required to submit a challenge. If a prover supplies a valid proof, the bond is disbursed to the prover. |
| ANCHOR_STATE_REGISTRY | The anchor state registry contract. |
| ACCESS_MANAGER | The access manager contract. |

Types

ProposalStatus

While GameStatus (IN_PROGRESS, DEFENDER_WINS, CHALLENGER_WINS) represents the final outcome of the game, we need ProposalStatus to:

- allow proving for fast finality even if the proposal is unchallenged.

- allow anyone to prove a proposal, even if the prover is not the proposer.

ProposalStatus represents the current state of a proposal in the dispute game:

enum ProposalStatus {

Unchallenged, // Initial state: New proposal without challenge

Challenged, // Challenged by someone, awaiting proof

UnchallengedAndValidProofProvided, // Valid proof provided without any challenge

ChallengedAndValidProofProvided, // Valid proof provided after being challenged

Resolved // Final state after game resolution

}

Proposal Status Transitions:

- Unchallenged → Challenged (via challenge()), UnchallengedAndValidProofProvided (via prove()), or Resolved (via resolve())

- Challenged → ChallengedAndValidProofProvided (via prove()) or Resolved (via resolve())

- UnchallengedAndValidProofProvided → Resolved (via resolve())

- ChallengedAndValidProofProvided → Resolved (via resolve())
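The transitions listed above form a small state machine, which can be mirrored off-chain as a lookup function. The sketch below is a Rust model for illustration, not the Solidity contract: the Action enum and next_status function are hypothetical names, and timing rules (deadlines) are deliberately left out.

```rust
/// Off-chain mirror of the contract's ProposalStatus enum.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ProposalStatus {
    Unchallenged,
    Challenged,
    UnchallengedAndValidProofProvided,
    ChallengedAndValidProofProvided,
    Resolved,
}

/// The three contract calls that can move a proposal between states.
/// Hypothetical type for this sketch.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Action {
    Challenge,
    Prove,
    Resolve,
}

/// Returns the status after `action`, or None if the transition is not in
/// the list above. Deadline checks are not modeled here.
pub fn next_status(current: ProposalStatus, action: Action) -> Option<ProposalStatus> {
    use Action::*;
    use ProposalStatus::*;
    match (current, action) {
        (Unchallenged, Challenge) => Some(Challenged),
        (Unchallenged, Prove) => Some(UnchallengedAndValidProofProvided),
        (Challenged, Prove) => Some(ChallengedAndValidProofProvided),
        // A resolved proposal is final.
        (Resolved, _) => None,
        // Every unresolved state can be resolved.
        (_, Resolve) => Some(Resolved),
        _ => None,
    }
}
```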

ClaimData

Core data structure holding the state of a proposal:

struct ClaimData {

uint32 parentIndex; // Reference to parent game (uint32.max for first game)

address counteredBy; // Address of challenger (address(0) if unchallenged)

address prover; // Address that provided valid proof (address(0) if unproven)

Claim claim; // The claimed L2 output root

ProposalStatus status; // Current status of the proposal

Timestamp deadline; // Time by which proof must be provided

}

Key differences from Optimism's implementation:

- parentIndex is initialized on initialize().

- Simplified to single claim instead of array of claims.

- Removed claimant and position fields since there is no bisection.

- Removed bond field since bonds are stored in the game contract.

- Added prover field to enable anyone to prove a proposal, even if the prover is not the proposer.

- Added proposal status.

- Uses deadline instead of clock with period getters.

Key Functions

Initialization

function initialize() external payable virtual

Initializes the dispute game with:

- Initial state of the game

  - startingOutputRoot: Starting point for verification

    - For first game: Anchor state root for the game type

    - For subsequent games: Parent game's root claim and block number

  - claimData: Core game state

    - parentIndex: Reference to the parent game (uint32.max for first game)

    - counteredBy: Initially address(0)

    - prover: Initially address(0)

    - claim: Proposer's claimed output root

    - status: Set to ProposalStatus.Unchallenged

    - deadline: Set to block.timestamp + MAX_CHALLENGE_DURATION

- Validation Rules

  - Parent Game (if not first game)

    - Must not have been blacklisted

    - Must have been respected game type when created

    - Must not have been won by challenger

  - Output Root

    - Must be after starting block number

    - First game starts from the anchor state root for the game type

    - Later games start from parent's output root

- Bond Management

  - Proposer's bond enforced by factory

  - Held in contract until resolution

Initialization will revert if:

- Already initialized

- Invalid parent game

- Root claim at/before starting block

- Incorrect calldata size for extraData

- Proposer is not whitelisted

Challenge

function challenge() external payable returns (ProposalStatus)

Allows participants to challenge a proposal by:

- Depositing the challenger bond (the proof reward)

- Setting the proposal deadline to the current timestamp plus the proving time (MAX_PROVE_DURATION)

- Updating proposal state to ProposalStatus.Challenged

Attempting to challenge a game will revert if:

- Game is over (past deadline or already proven)

- Challenger is not whitelisted

- Incorrect bond amount provided
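The checks and state updates described for challenge() can be modeled off-chain as below. This is a hedged Rust sketch, not the contract code: the Proposal struct, the error names, the order of the checks, and treating the deadline itself as "past" are assumptions, and the AlreadyChallenged case is inferred from the status transitions rather than from the revert list.

```rust
/// Reasons a challenge is rejected in this sketch (hypothetical names).
#[derive(Debug, PartialEq, Eq)]
pub enum ChallengeError {
    GameOver,
    AlreadyChallenged,
    NotWhitelisted,
    IncorrectBond,
}

/// Minimal view of a proposal: deadline in unix seconds plus two flags.
#[derive(Debug)]
pub struct Proposal {
    pub deadline: u64,
    pub challenged: bool,
    pub proven: bool,
}

/// Apply a challenge at time `now`. On success the proposal is marked
/// challenged and its deadline becomes `now + max_prove_duration`.
pub fn challenge(
    proposal: &mut Proposal,
    now: u64,
    sender_whitelisted: bool,
    value_wei: u128,
    challenger_bond_wei: u128,
    max_prove_duration: u64,
) -> Result<(), ChallengeError> {
    // Only an Unchallenged proposal can move to Challenged.
    if proposal.challenged {
        return Err(ChallengeError::AlreadyChallenged);
    }
    // Game is over: already proven or past the deadline (boundary assumed).
    if proposal.proven || now >= proposal.deadline {
        return Err(ChallengeError::GameOver);
    }
    if !sender_whitelisted {
        return Err(ChallengeError::NotWhitelisted);
    }
    // The deposited value must equal the challenger bond exactly.
    if value_wei != challenger_bond_wei {
        return Err(ChallengeError::IncorrectBond);
    }
    proposal.challenged = true;
    proposal.deadline = now + max_prove_duration;
    Ok(())
}
```

With MAX_PROVE_DURATION set to 86400, a challenge accepted at timestamp 500 would move the deadline to 86900 in this model.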

Proving

function prove(bytes calldata proofBytes) external returns (ProposalStatus)

Validates a proposal with a proof:

- Timing Requirements

  - Must be submitted before the game is over (deadline passed or already proven)

- Proof Verification

  - Uses SP1 verifier to validate the aggregation proof against public inputs:

    - L1 head hash (from game creation)

    - Starting output root (from parent game or anchor state root)

    - Claimed output root

    - Claimed L2 block number

    - Rollup configuration hash

    - Range verification key commitment

  - Uses aggregation verification key to verify the proof

- State Updates

  - Records the prover's address in claimData.prover

  - Updates proposal status if the proof is valid:

    - ProposalStatus.UnchallengedAndValidProofProvided if there was no challenge

    - ProposalStatus.ChallengedAndValidProofProvided if there was a challenge

- Rewards

  - No immediate reward distribution

  - Proof reward is distributed in claimCredit():

    - If challenged: prover receives the challenger's bond

    - If unchallenged: no reward but can have fast finality

Attempting to submit a proof will revert if:

- Proof is not submitted before the proof deadline

- Proof is not valid

- The game has already been proven

Resolution

function resolve() external returns (GameStatus)

Resolves the game by:

- Checking parent game status:

  - Must be resolved

  - Must be respected

  - Must not be blacklisted

  - Must not be retired

  - If parent game is CHALLENGER_WINS, current game automatically resolves to CHALLENGER_WINS

- For other cases:

  - Game must be over (past deadline or proven)

  - Resolution depends on final state:

    - Unchallenged: DEFENDER_WINS, proposer gets its bond back

    - Challenged: CHALLENGER_WINS, challenger gets everything

    - UnchallengedAndValidProofProvided: DEFENDER_WINS, proposer gets its bond back

    - ChallengedAndValidProofProvided: DEFENDER_WINS, prover gets challenger bond, proposer gets its bond back

Attempting to resolve will revert if:

- Parent game is not yet resolved

- Game is already resolved (i.e., not IN_PROGRESS)

- Challenge/proof window has not expired
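The resolution rules above, including the parent-child rule from the illustrative example, can be condensed into one decision function. The following Rust model is a sketch under assumptions: the names are hypothetical, the respected, blacklisted, and retired checks on the parent are omitted, and a parent of None stands for the first game, which starts from the anchor state.

```rust
/// Off-chain mirror of GameStatus.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum GameStatus {
    InProgress,
    DefenderWins,
    ChallengerWins,
}

/// Reasons resolution is rejected in this sketch (hypothetical names).
#[derive(Debug, PartialEq, Eq)]
pub enum ResolveError {
    AlreadyResolved,
    ParentNotResolved,
    GameNotOver,
}

/// Decide the outcome of a game at time `now`.
pub fn resolve(
    current: GameStatus,
    parent: Option<GameStatus>,
    challenged: bool,
    proven: bool,
    now: u64,
    deadline: u64,
) -> Result<GameStatus, ResolveError> {
    if current != GameStatus::InProgress {
        return Err(ResolveError::AlreadyResolved);
    }
    match parent {
        Some(GameStatus::InProgress) => return Err(ResolveError::ParentNotResolved),
        // An invalid parent invalidates the child regardless of its own state.
        Some(GameStatus::ChallengerWins) => return Ok(GameStatus::ChallengerWins),
        _ => {}
    }
    // The game is over once it is proven or the deadline has passed.
    if !proven && now < deadline {
        return Err(ResolveError::GameNotOver);
    }
    // Only a challenged proposal that was never proven loses.
    if challenged && !proven {
        Ok(GameStatus::ChallengerWins)
    } else {
        Ok(GameStatus::DefenderWins)
    }
}
```

In terms of the earlier example, Proposal 3A corresponds to a call with a parent of ChallengerWins, which yields ChallengerWins whatever the other arguments are.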

Reward Distribution

function claimCredit(address _recipient) external

Claims rewards by:

- Closing the game and determining bond distribution mode if not already set

- Checking recipient's credit balance based on distribution mode:

  - NORMAL: Uses normalModeCredit for standard game outcomes

  - REFUND: Uses refundModeCredit when game is improper

- Setting recipient's credit balance to 0

- Transferring ETH to recipient

Attempting to claim will revert if:

- Game is not resolved

- Game is not finalized according to AnchorStateRegistry

- Recipient has no credit to claim

- ETH transfer fails

- Invalid bond distribution mode

Bond distribution modes:

- NORMAL: Standard outcome-based distribution

  - Defender wins unchallenged: Proposer gets full bond

  - Defender wins challenged: Prover gets reward, proposer gets remainder

  - Challenger wins: Challenger gets full bond

- REFUND: Returns bonds to original depositors when game is improper
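To make the NORMAL mode arithmetic concrete, the sketch below computes per-role credits for the three outcomes listed above. It is an illustrative Rust model, not the contract's accounting: the Outcome and Credits types are hypothetical, roles are kept separate even when one address plays several of them, and the case where the challenger side wins only because the parent game was invalid (with no challenger bond deposited) is not covered.

```rust
/// The three NORMAL mode outcomes described above (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Outcome {
    DefenderWinsUnchallenged,
    DefenderWinsChallenged,
    ChallengerWins,
}

/// Credit per role, in wei.
#[derive(Debug, Default, PartialEq, Eq)]
pub struct Credits {
    pub proposer: u128,
    pub challenger: u128,
    pub prover: u128,
}

/// Split the bonds held by the game according to the outcome.
pub fn normal_mode_credits(
    outcome: Outcome,
    proposer_bond_wei: u128,
    challenger_bond_wei: u128,
) -> Credits {
    match outcome {
        // No challenge happened, so only the proposer's bond is held.
        Outcome::DefenderWinsUnchallenged => Credits {
            proposer: proposer_bond_wei,
            ..Credits::default()
        },
        // The challenger's bond is the proof reward; the proposer is refunded.
        Outcome::DefenderWinsChallenged => Credits {
            proposer: proposer_bond_wei,
            prover: challenger_bond_wei,
            ..Credits::default()
        },
        // The challenger receives both bonds.
        Outcome::ChallengerWins => Credits {
            challenger: proposer_bond_wei + challenger_bond_wei,
            ..Credits::default()
        },
    }
}
```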

Security Model

Bond System

The contract implements a bond system to incentivize honest behavior:

- Proposal Bond: Required to submit a proposal.

- Challenger Bond (Proof Reward): Required to challenge a proposal, which is paid to successful provers.

Time Windows

Two key time windows ensure fair participation:

- Challenge Window: Period during which proposals can be challenged.

- Proving Window: Time allowed for submitting proofs after a challenge.

Parent-Child Relationships

- Each game (except genesis) has a parent

- Invalid parent automatically invalidates children

- Child games can only be resolved if their parent game is resolved

Acknowledgements

Special thanks to Kelvin Fichter for his invaluable contributions with thorough design reviews, technical guidance, and insightful feedback throughout the development process.

Zach Obront, who worked on the first version of OP Succinct, prototyped a similar MultiProof dispute game implementation with OP Succinct as part of his work with the Ithaca team. This fault proof implementation takes some inspiration from his multiproof work.

Quick Start Guide: OP Succinct Fault Dispute Game

This guide provides the fastest path to try out OP Succinct fault dispute games by deploying contracts and running a proposer to create games.

If your integration involves alternative data availability solutions like

Celestia or EigenDA, you may need to configure additional environment variables.

Refer to the Experimental Features section

for the required setup steps.

Prerequisites

- Foundry

- Rust (latest stable version)

- just

- L1 and L2 archive node RPC endpoints

- L2 node should be configured with SafeDB enabled.

- See SafeDB Configuration for more details.

- ETH on L1 for:

- Contract deployment

- Game bonds (configurable in factory)

- Challenge bonds (proof rewards)

- Transaction fees

On Ubuntu, you'll need some system dependencies to run the service:

sudo apt update && sudo apt install -y \

curl clang pkg-config libssl-dev ca-certificates git libclang-dev jq build-essential

Step 1: Clone and build contracts

Clone the repository and build the contracts:

git clone https://github.com/succinctlabs/op-succinct.git

cd op-succinct/contracts

forge build

cd ..

Step 2: Configure environment

Create a .env file in the project root. See this guide for more details on these environment variables:

# example .env file

L1_RPC=<YOUR_L1_RPC_URL>

L2_RPC=<YOUR_L2_RPC_URL>

L2_NODE_RPC=<YOUR_L2_NODE_RPC_URL>

PRIVATE_KEY=<YOUR_PRIVATE_KEY>

# Required - set these values

GAME_TYPE=42

DISPUTE_GAME_FINALITY_DELAY_SECONDS=604800

MAX_CHALLENGE_DURATION=604800

MAX_PROVE_DURATION=86400

# Optional

# Not needed by default, but could be required for integrations that access consensus-layer data.

L1_BEACON_RPC=<L1_BEACON_RPC_URL>

# Warning: Setting PERMISSIONLESS_MODE=true allows anyone to propose and challenge games. Ensure this behavior is intended for your deployment.

# For a permissioned setup, set this to false and configure PROPOSER_ADDRESSES and CHALLENGER_ADDRESSES.

PERMISSIONLESS_MODE=true

# For testing, use mock verifier

OP_SUCCINCT_MOCK=true

Obtaining a Test Private Key

- Anvil (local devnet): Run anvil and use one of the pre-funded accounts printed on startup. Copy the Private Key value for any account. Only use these on your local Anvil network.

- Foundry (generate a fresh key): Run cast wallet new, which prints the new key in human-readable form. Save the private key and fund it on your test network.

⚠️ Caution: Never use test keys on mainnet or with real assets.

Step 3: Deploy contracts

- Deploy an SP1 mock verifier:

just deploy-mock-verifier

- Deploy the core fault dispute game contracts:

just deploy-fdg-contracts

Save the output addresses, particularly the value printed as "AnchorStateRegistry: 0x..." (used as ANCHOR_STATE_REGISTRY_ADDRESS) and the value printed as "Factory Proxy: 0x..." (used as FACTORY_ADDRESS).

Step 4: Run the Proposer

- Create a .env.proposer file in the project root directory:

# Required Configuration

L1_RPC=<YOUR_L1_RPC_URL>

L2_RPC=<YOUR_L2_RPC_URL>

L2_NODE_RPC=<YOUR_L2_NODE_RPC_URL>

ANCHOR_STATE_REGISTRY_ADDRESS=<ANCHOR_STATE_REGISTRY_ADDRESS_FROM_DEPLOYMENT>

FACTORY_ADDRESS=<FACTORY_ADDRESS_FROM_DEPLOYMENT>

GAME_TYPE=42

PRIVATE_KEY=<YOUR_PRIVATE_KEY>

MOCK_MODE=true # Set to true for mock mode

- Run the proposer:

cargo run --bin proposer

(Optional) Run with fast finality mode

- Add the following environment variables to the .env.proposer file in the project root directory:

FAST_FINALITY_MODE=true

NETWORK_PRIVATE_KEY=0x...

For the Succinct Prover Network setup, see the quickstart guide.

- Run the proposer:

cargo run --bin proposer

Step 5: Run the Challenger

- Create a .env.challenger file in the project root directory:

# Required Configuration

L1_RPC=<YOUR_L1_RPC_URL>

L2_RPC=<YOUR_L2_RPC_URL>

ANCHOR_STATE_REGISTRY_ADDRESS=<ANCHOR_STATE_REGISTRY_ADDRESS_FROM_DEPLOYMENT>

FACTORY_ADDRESS=<FACTORY_ADDRESS_FROM_DEPLOYMENT>

GAME_TYPE=42

PRIVATE_KEY=<YOUR_PRIVATE_KEY>

- Run the challenger:

cargo run --bin challenger

Monitoring

- Games are created every 1800 blocks by default (configurable via PROPOSAL_INTERVAL_IN_BLOCKS in .env.proposer; see Optional Environment Variables for Proposer).

- Track games via a block explorer using the factory/game addresses and tx hashes from the logs.

- Both the proposer and the challenger attempt to resolve eligible games after the challenge period.

Troubleshooting

Common issues:

- Deployment fails: Check RPC connection and ETH balance

- Proposer won't start: Verify environment variables and addresses

- Games not being created: Check the proposer logs for errors and verify the L1 and L2 RPC endpoints

For detailed configuration and advanced features, see:

Contract Management

This section will show you how to configure and deploy the on-chain contracts required for OP Succinct in fault-proof mode.

| Contract | Description |
|---|---|
| OPSuccinctFaultDisputeGame.sol | Fault proof verification contract implementing IDisputeGame.sol interface for OptimismPortal2.sol. Implements OP Succinct Lite fault proof challenge mechanism. |
| AccessManager.sol | Manages access control for the fault proof system. |

Deploying OP Succinct Fault Dispute Game

This guide explains how to deploy the OP Succinct Fault Dispute Game contracts using the DeployOPSuccinctFDG.s.sol script.

Overview

The deployment script performs the following actions:

- Deploys the DisputeGameFactory implementation and proxy.

- Deploys the AnchorStateRegistry implementation and proxy.

- Deploys a mock OptimismPortal2 for testing.

- Deploys the AccessManager and configures it for permissionless games.

- Deploys either a mock SP1 verifier for testing or uses a provided verifier address.

- Deploys the OPSuccinctFaultDisputeGame implementation.

- Configures the factory with initial bond and game implementation.

Prerequisites

- Foundry installed.

- Access to an Ethereum node (local or network).

- Environment variables properly configured.

| Parameter | Description |
|---|---|
| L1_RPC | L1 Archive Node. |
| L2_RPC | L2 Execution Node (op-geth). |
| L2_NODE_RPC | L2 Rollup Node (op-node). |
| PRIVATE_KEY | Private key for the account that will be deploying the contract. |
| ETHERSCAN_API_KEY | Etherscan API key used for verifying the contract (optional). |

Contract Configuration

Create a .env file in the project root directory with the following variables:

Required Environment Variables

| Variable | Description | Example |
|---|---|---|
| L1_BEACON_RPC | L1 Consensus (Beacon) Node. Could be required for integrations that access consensus-layer data. | |
| GAME_TYPE | Unique identifier for the game type (uint32). In almost all cases, to use the OP Succinct Fault Dispute Game, this should be set to 42. | 42 |
| DISPUTE_GAME_FINALITY_DELAY_SECONDS | Delay before finalizing dispute games. | 604800 for 7 days |
| MAX_CHALLENGE_DURATION | Maximum duration for challenges in seconds. | 604800 for 7 days |
| MAX_PROVE_DURATION | Maximum duration for proving in seconds. | 86400 for 1 day |
| PROPOSER_ADDRESSES | Comma-separated list of addresses allowed to propose games. Ignored if PERMISSIONLESS_MODE is true. | 0x123...,0x456... |
| CHALLENGER_ADDRESSES | Comma-separated list of addresses allowed to challenge games. Ignored if PERMISSIONLESS_MODE is true. | 0x123...,0x456... |

SP1 Verifier Configuration

For testing: Set OP_SUCCINCT_MOCK=true. The deployment script will automatically deploy a new SP1MockVerifier contract — no need to set VERIFIER_ADDRESS.

For production: Leave OP_SUCCINCT_MOCK unset (defaults to false) and optionally set VERIFIER_ADDRESS to a custom verifier. If VERIFIER_ADDRESS is not set, it defaults to Succinct's official Plonk VerifierGateway.

Deployment

Run the following command. This automatically detects configurations based on the contents of the elf directory, environment variables, and the L2.

just deploy-fdg-contracts

Optional Environment Variables

The deployment script deploys the contracts with the following parameters:

| Variable | Description | Example |
|---|---|---|
| INITIAL_BOND_WEI | Initial bond for the game. | 1_000_000_000_000_000 (for 0.001 ETH) |
| CHALLENGER_BOND_WEI | Challenger bond for the game. | 1_000_000_000_000_000 (for 0.001 ETH) |
| OPTIMISM_PORTAL2_ADDRESS | Address of an existing OptimismPortal2 contract. Default: if unset, a fresh MockOptimismPortal2 is deployed. | 0x... |
| PERMISSIONLESS_MODE | If set to true, anyone can propose or challenge games. Default: false | true or false |
| FALLBACK_TIMEOUT_FP_SECS | Timeout in seconds after which permissionless proposing is allowed if no proposal has been made. | 1209600 (for 2 weeks) |
| STARTING_L2_BLOCK_NUMBER | Starting L2 block number in decimal. Default: <Latest L2 finalized block> - <Number of blocks produced in DISPUTE_GAME_FINALITY_DELAY_SECONDS> | 786000 |
| VERIFIER_ADDRESS | Address of the ISP1Verifier contract used to verify proofs. Default: Succinct's official Plonk VerifierGateway. Ignored when OP_SUCCINCT_MOCK=true. | 0x... |
| OP_SUCCINCT_MOCK | If true, the deployment script automatically deploys a new SP1MockVerifier for testing (faster and cheaper than real proofs). Default: false. | true or false |

Use cast --to-wei <value> eth to convert the value to wei to avoid mistakes.

These values depend on the L2 chain and the total value secured. Generally, to prevent frivolous challenges, CHALLENGER_BOND_WEI should be set to at least 10x the cost of proving a game.
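The 10x guideline can be turned into a small calculation. The sketch below assumes you have your own estimate of the proving cost per game expressed in ETH; the helper names eth_to_wei and min_challenger_bond_wei are hypothetical and are not part of the deployment tooling.

```python
from decimal import Decimal

WEI_PER_ETH = 10**18


def eth_to_wei(amount_eth):
    """Convert a decimal ETH amount (given as a string) to an integer number of wei."""
    wei = Decimal(amount_eth) * WEI_PER_ETH
    # Reject negative amounts and amounts finer than 1 wei.
    if wei < 0 or wei != wei.to_integral_value():
        raise ValueError(f"not a valid ETH amount: {amount_eth}")
    return int(wei)


def min_challenger_bond_wei(proving_cost_eth, multiple=10):
    """Smallest bond that satisfies the 'at least 10x the proving cost' guideline."""
    return eth_to_wei(proving_cost_eth) * multiple


# With an assumed proving cost of 0.0001 ETH per game, the guideline yields
# a bond of 0.001 ETH, i.e. the example value shown in the table.
print(min_challenger_bond_wei("0.0001"))
```

Passing the amount as a string avoids binary floating-point rounding, which matters when the result is written into CHALLENGER_BOND_WEI verbatim.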

Fallback Timeout Mechanism

The FALLBACK_TIMEOUT_FP_SECS parameter configures a permissionless fallback timeout mechanism for proposal creation:

- Default: If not set, defaults to 2 weeks (1209600 seconds)

- Behavior: After the specified timeout has elapsed since the last proposal, anyone can create a new proposal regardless of the PROPOSER_ADDRESSES configuration

- Reset: The timeout is reset every time a valid proposal is created

- Immutable: The timeout value is set during deployment and cannot be changed later

- Scope: Only affects proposer permissions; challenger permissions are unaffected

This mechanism ensures that if approved proposers become inactive, the system can still progress through permissionless participation after a reasonable delay.
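The permission rule described in this section can be modeled as a single predicate. This is a simplified sketch of the described behavior, not the contract code: the function name may_propose and its parameters are hypothetical, and treating the boundary as strictly greater than the timeout is an assumption.

```python
TWO_WEEKS_SECS = 1_209_600  # documented default for FALLBACK_TIMEOUT_FP_SECS


def may_propose(sender, now, last_proposal_ts, allowed_proposers,
                fallback_timeout_secs=TWO_WEEKS_SECS, permissionless=False):
    """Return True if `sender` would be allowed to create a proposal at time `now`."""
    # Permissionless mode ignores the allowlist entirely.
    if permissionless:
        return True
    # Approved proposers can always propose.
    if sender.lower() in {a.lower() for a in allowed_proposers}:
        return True
    # Anyone else must wait until the timeout since the last proposal has elapsed.
    return now - last_proposal_ts > fallback_timeout_secs


allowed = {"0xAAAA"}
print(may_propose("0xbbbb", now=100, last_proposal_ts=0, allowed_proposers=allowed))
print(may_propose("0xbbbb", now=1_209_601, last_proposal_ts=0, allowed_proposers=allowed))
```

Because every valid proposal resets last_proposal_ts, an active approved proposer keeps the fallback window from ever opening.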

Post-Deployment

After deployment, the script will output the addresses of:

- Factory Proxy.

- Game Implementation.

- SP1 Verifier.

- OptimismPortal2.

- Anchor State Registry.

- Access Manager.

Save these addresses for future reference and configuration of other components.

Security Considerations

- The deployer address will be set as the factory owner.

- Initial parameters are set for testing - adjust for production.

- The mock SP1 verifier (OP_SUCCINCT_MOCK=true) should ONLY be used for testing.

- For production deployments:

  - Provide a valid VERIFIER_ADDRESS.

  - Configure proper ROLLUP_CONFIG_HASH, AGGREGATION_VKEY, and RANGE_VKEY_COMMITMENT. If you used the just deploy-fdg-contracts script, these parameters should have been automatically set correctly using fetch_fault_dispute_game_config.rs.

  - Set the OPTIMISM_PORTAL2_ADDRESS environment variable, instead of using the default mock portal.

  - Review and adjust finality delay and duration parameters.

  - Consider access control settings.

Troubleshooting

Common issues and solutions:

- Compilation Errors:

  - Ensure Foundry is up to date (run foundryup).

  - Run forge clean && forge build.

- Deployment Failures:

  - Check RPC connection.

  - Verify sufficient ETH balance.

  - Confirm environment variables are set correctly.

Next Steps

After deployment:

- Update the proposer configuration with the factory address.

- Configure the challenger with the game parameters.

- Test the deployment with a sample game.

- Monitor initial games for correct behavior.

- For production: Replace mock OptimismPortal2 with the real implementation.

Upgrading the OP Succinct Fault Dispute Game

This guide explains how to upgrade the OP Succinct Fault Dispute Game contract.

Overview

The upgrade script performs the following actions:

- Deploys a new implementation of the OPSuccinctFaultDisputeGame contract

- Sets the new implementation in the DisputeGameFactory for the specified game type

Required Environment Variables

Create a .env.upgrade file in the fault-proof directory with the following variables:

| Variable | Description | Example |
|---|---|---|
| L1_RPC | L1 RPC endpoint URL | http://127.0.0.1:32935 |
| FACTORY_ADDRESS | Address of the existing DisputeGameFactory | 0x... |
| GAME_TYPE | Unique identifier for the game type (uint32) | 42 |
| MAX_CHALLENGE_DURATION | Maximum duration for challenges in seconds | 604800 for 7 days |
| MAX_PROVE_DURATION | Maximum duration for proving in seconds | 86400 for 1 day |
| VERIFIER_ADDRESS | Address of the SP1 verifier | 0x... |
| ROLLUP_CONFIG_HASH | Hash of the rollup configuration | 0x... |
| AGGREGATION_VKEY | Verification key for aggregation | 0x... |
| RANGE_VKEY_COMMITMENT | Commitment to range verification key | 0x... |
| ANCHOR_STATE_REGISTRY | Address of the AnchorStateRegistry | 0x... |
| ACCESS_MANAGER | Address of the AccessManager | 0x... |
| ETHERSCAN_API_KEY | Etherscan API key for verifying the deployed contracts. | |
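Since the upgrade needs many values at once, it can help to check the file before running anything. The sketch below is a hypothetical helper, not part of the repository: it parses simple KEY=VALUE lines (no quoting support), treats ETHERSCAN_API_KEY as optional, and assumes the hash and verification key values are 32-byte hex strings.

```python
import re

ADDRESS_RE = re.compile(r"0x[0-9a-fA-F]{40}")
BYTES32_RE = re.compile(r"0x[0-9a-fA-F]{64}")

REQUIRED = (
    "L1_RPC", "FACTORY_ADDRESS", "GAME_TYPE", "MAX_CHALLENGE_DURATION",
    "MAX_PROVE_DURATION", "VERIFIER_ADDRESS", "ROLLUP_CONFIG_HASH",
    "AGGREGATION_VKEY", "RANGE_VKEY_COMMITMENT", "ANCHOR_STATE_REGISTRY",
    "ACCESS_MANAGER",
)
ADDRESS_VARS = ("FACTORY_ADDRESS", "VERIFIER_ADDRESS", "ANCHOR_STATE_REGISTRY", "ACCESS_MANAGER")
# Assumption: these three values are encoded as 32-byte hex strings.
BYTES32_VARS = ("ROLLUP_CONFIG_HASH", "AGGREGATION_VKEY", "RANGE_VKEY_COMMITMENT")


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip()
    return env


def check_upgrade_env(env):
    """Return one message per missing or malformed variable."""
    problems = []
    for name in REQUIRED:
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is missing")
        elif name in ADDRESS_VARS and not ADDRESS_RE.fullmatch(value):
            problems.append(f"{name} is not a 20-byte hex address")
        elif name in BYTES32_VARS and not BYTES32_RE.fullmatch(value):
            problems.append(f"{name} is not a 32-byte hex value")
    return problems
```

Calling check_upgrade_env(parse_env(open(".env.upgrade").read())) from the fault-proof directory would list anything that still needs to be filled in.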

Getting the Rollup Config Hash, Aggregation Verification Key, and Range Verification Key Commitment

First, create a .env file in the root directory with the following variables:

L1_RPC=<L1_RPC_URL>

L2_RPC=<L2_RPC_URL>

L2_NODE_RPC=<L2_NODE_RPC_URL>

You can get the aggregation program verification key, range program verification key commitment, and rollup config hash by running the following command:

cargo run --bin config --release -- --env-file <PATH_TO_ENV_FILE>

If your integration involves alternative DA solutions like Celestia or EigenDA, ensure you enable the respective feature flag. For example, add --features celestia or --features eigenda to the command for proper configuration.

Optional Environment Variables

| Variable | Description | Default | Example |
|---|---|---|---|
| CHALLENGER_BOND_WEI | Challenger bond for the game | 0.001 ether | 1000000000000000 |

Use cast --to-wei <value> eth to convert the value to wei to avoid mistakes.

Upgrade Command

Dry-run the upgrade and print the calldata for the upgrade call:

DRY_RUN=true just upgrade-fault-dispute-game

Run the upgrade command (requires PRIVATE_KEY in .env.upgrade):

DRY_RUN=false just upgrade-fault-dispute-game

Verification

You can verify the upgrade by running the following command:

cast call <FACTORY_ADDRESS> "gameImpls(uint32)" <GAME_TYPE> --rpc-url <L1_RPC_URL>
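The command returns the implementation address registered for the game type, which should match the newly deployed implementation. A minimal sketch of that comparison follows, assuming the command prints the raw 32-byte ABI-encoded return word (the signature above declares no return type); the helper names address_from_word and upgrade_applied are hypothetical, and str.removeprefix requires Python 3.9 or later.

```python
HEX_DIGITS = set("0123456789abcdef")


def address_from_word(word_hex):
    """Extract the 20-byte address from a 32-byte ABI-encoded return word."""
    raw = word_hex.strip().lower().removeprefix("0x")
    if len(raw) != 64 or not set(raw) <= HEX_DIGITS:
        raise ValueError("expected a 32-byte hex word")
    # An ABI-encoded address is left-padded with 12 zero bytes.
    if raw[:24] != "0" * 24:
        raise ValueError("upper 12 bytes are not zero; not an address")
    return "0x" + raw[24:]


def upgrade_applied(cast_output, expected_impl):
    """Compare the factory's registered implementation with the expected address."""
    return address_from_word(cast_output) == expected_impl.strip().lower()
```

Here expected_impl would be the new implementation address printed by the upgrade script; the comparison is case-insensitive so checksummed addresses match as well.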

Troubleshooting

Common issues and solutions:

- Compilation Errors:

  - Run cd contracts && forge clean

- Deployment Failures:

  - Check RPC connection

  - Verify sufficient ETH balance

  - Confirm environment variables are set correctly

Running OP Succinct Lite

Fault Proof Proposer

The fault proof proposer is a component responsible for creating and managing OP-Succinct fault dispute games on the L1 chain. It continuously monitors the L2 chain and creates new dispute games at regular intervals to ensure the validity of L2 state transitions.

Prerequisites

Before running the proposer, ensure you have:

- Rust toolchain installed (latest stable version)

- Access to L1 and L2 network nodes

- The DisputeGameFactory contract deployed (See Deploy)

- Sufficient ETH balance for:

  - Transaction fees

  - Game bonds (configurable in the factory)

- Required environment variables properly configured (see Configuration)

Overview

The proposer performs several key functions:

- State Synchronization: Maintains a cached view of the dispute DAG, anchor pointer, and canonical head used to schedule new work.

- Game Creation: Proposes new games when the finalized L2 head advances past the configured interval.

- Game Defense: Spawns proof-generation tasks for challenged games, reusing the same proving pipeline as fast finality mode when enabled.

- Game Resolution & Bonds: Resolves games the proposer created or proved once eligible and claims credit from finalized wins before pruning them from the cache.
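The game creation rule above can be pictured as a simple scheduling check. This is a rough sketch of the described behavior rather than the proposer's actual implementation: the function next_proposal_block and the parameter proposal_interval_blocks are hypothetical names, and treating "advances past" as greater than or equal to the target is an assumption.

```python
def next_proposal_block(latest_proposed_block, finalized_l2_head, proposal_interval_blocks):
    """Return the L2 block to propose next, or None if it is too early."""
    target = latest_proposed_block + proposal_interval_blocks
    # Only propose once the finalized head has reached the next interval boundary.
    if finalized_l2_head >= target:
        return target
    return None


# Finalized head has not yet advanced a full interval: nothing to propose.
print(next_proposal_block(1000, 1500, 600))
# Finalized head is past the boundary: propose the block at the boundary.
print(next_proposal_block(1000, 2500, 600))
```

Proposing the boundary block rather than the current head keeps each game covering a fixed-size range, even when the proposer has fallen behind.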

Configuration

The proposer is configured through environment variables.

Create a .env.proposer file in the fault-proof directory with all required variables. This single file is used by:

- Docker Compose (for both variable substitution and runtime configuration)

- Direct binary execution (cargo run --bin proposer from the fault-proof directory; the binary automatically loads .env.proposer)

Required Environment Variables

| Variable | Description |
|---|---|
| L1_RPC | L1 RPC endpoint URL |
| L2_RPC | L2 RPC endpoint URL |
| ANCHOR_STATE_REGISTRY_ADDRESS | Address of the AnchorStateRegistry contract |
| FACTORY_ADDRESS | Address of the DisputeGameFactory contract |
| GAME_TYPE | Type identifier for the dispute game |
| NETWORK_PRIVATE_KEY | Private key for the Succinct Prover Network. See the Succinct Prover Network Quickstart for setup instructions. (Set to 0x0000000000000000000000000000000000000000000000000000000000000001 if not using fast finality mode) |

For transaction signing, the following methods are supported:

| Method | Description |
|---|---|
| Local wallet | Sign transactions using a private key stored locally |
| Web3 wallet | Sign transactions using an external web3 signer service |
| Google HSM | Sign transactions using Google Cloud Hardware Security Module |

Depending on the one you choose, you must provide the corresponding environment variables:

Local wallet

| Variable | Description |
|---|---|
| PRIVATE_KEY | Private key for transaction signing (if using private key signer) |

Web3 wallet

| Variable | Description |

|---|---|